5. Geo Services Guide

This guide covers the details of geo data management and retrieval in rasdaman. The rasdaman Array DBMS is domain agnostic; the specific semantics of space and time are provided through a layer on top of rasdaman, historically known as petascope. It offers spatio-temporal access and analytics through APIs based on the OGC data standard Coverage Implementation Schema (CIS) and the OGC service standards Web Map Service (WMS), Web Coverage Service (WCS), and Web Coverage Processing Service (WCPS).

Note

While the name petascope addresses a specific component, we frequently use the name rasdaman to refer to the complete system, including petascope.

5.1. OGC Coverage Standards Overview

For operating rasdaman geo services, as well as for accessing such geo services through these APIs, it is important to understand the mechanics of the relevant standards. In particular, the concept of OGC / ISO coverages is important.

In standardization, coverages are used to represent space/time varying phenomena, concretely: regular and irregular grids, point clouds, and general meshes. The coverage standards offer data and service models for dealing with those. In rasdaman, the focus is on multi-dimensional gridded (“raster”) coverages.

rasdaman supports the OGC standards WMS, WCS, and WCPS, and is the reference implementation for WCS. These APIs serve different purposes:

WMS delivers a 2D map as a visual image, suitable for consumption by humans

WCS delivers n-D data, suitable for further processing and analysis

WCPS performs flexible server-side processing, filtering, analytics, and fusion on coverages.

These coverage data and service concepts are summarized briefly below. Ample material is also available on the Web for familiarization with coverages (best consult in this sequence):

hands-on demos for multi-dimensional coverage services provided by Constructor University;

a series of webinars and tutorial slides provided by EarthServer;

a range of background information on these standards provided by OGC;

the official standards documents maintained by OGC:

5.1.1. Coverage Data

OGC CIS specifies an interoperable, conformance-testable coverage structure independent from any particular format encoding. Encodings are defined in OGC in GML, JSON, RDF, as well as a series of binary formats including GeoTIFF, netCDF, JPEG2000, and GRIB2.

By separating the data definition (CIS) from the service definition (WCS) it is possible for coverages to be served through a variety of APIs, such as WMS, WPS, and SOS. However, WCS and WCPS have coverage-specific functionality making them particularly suitable for flexible coverage access, analytics, and fusion.

5.1.2. Coverage Services

OGC WMS delivers 2D maps generated from styled layers stacked up. As such, WMS is a visualization service sitting at the end of processing pipelines, geared towards human consumption.

OGC WCS, on the other hand, provides data suitable for further processing (including visualization); as such, it is suitable also for machine-to-machine communication as it appears in the middle of longer processing pipelines. WCS is a modular suite of service functionality on coverages. WCS Core defines download of coverages and parts thereof, through subsetting directives, as well as delivery in some output format requested by the client. A set of WCS Extensions adds further functionality facets.

One of those is WCS Processing; it defines the ProcessCoverages request, which allows sending a coverage analytics request through the WCPS spatio-temporal analytics language. WCPS supports extraction, analytics, and fusion of multi-dimensional coverages expressed in a high-level, declarative, and safe language.
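For illustration, a ProcessCoverages request can be issued as an HTTP GET with key-value parameters. The sketch below only builds the URL. It assumes the /rasdaman/ows endpoint described in the next section, the 2.0.1 version string, and a simple WCPS query over mean_summer_airtemp, a coverage name borrowed from the error example later in this guide. The QUERY value has to be percent-encoded, which `urlencode` takes care of.

```python
from urllib.parse import urlencode


def process_coverages_url(endpoint: str, wcps_query: str) -> str:
    """Build a WCS ProcessCoverages GET URL carrying a WCPS query (sketch)."""
    params = {
        "SERVICE": "WCS",
        "VERSION": "2.0.1",
        "REQUEST": "ProcessCoverages",
        "QUERY": wcps_query,  # spaces, $, parentheses etc. get percent-encoded
    }
    return endpoint + "?" + urlencode(params)


# Hypothetical query: average over all cells of one coverage.
query = "for $c in (mean_summer_airtemp) return avg($c)"
url = process_coverages_url("http://localhost:8080/rasdaman/ows", query)
print(url)
```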

5.2. OGC Web Services Endpoint

Once the petascope geo service is deployed (see the rasdaman installation guide), coverages can be accessed through the HTTP service endpoint /rasdaman/ows.

For example, assuming that the service IP address is 123.456.789.1 and the service port is 8080, the following request URLs would deliver the Capabilities documents for OGC WMS and WCS, respectively:

http://123.456.789.1:8080/rasdaman/ows?SERVICE=WMS&REQUEST=GetCapabilities&VERSION=1.3.0

http://123.456.789.1:8080/rasdaman/ows?SERVICE=WCS&REQUEST=GetCapabilities&VERSION=2.0.1

Text responses, e.g. for GetCapabilities requests, will be compressed for faster download if the client sends the request with the HTTP header Accept-Encoding: gzip. This is normally done automatically by the browser, but may need to be handled explicitly when developing clients or when using command-line tools.
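Python's `urllib.request` does not decompress responses on its own, so a client that asks for gzip also has to undo the compression itself. Below is a minimal sketch. It assumes the endpoint from the example URLs above, and the helper names are hypothetical. Nothing is sent over the network unless the commented-out usage lines are enabled.

```python
import gzip
import urllib.request
from typing import Optional


def capabilities_request(endpoint: str, service: str = "WCS",
                         version: str = "2.0.1") -> urllib.request.Request:
    """Build a GetCapabilities request that asks for a gzip-compressed response."""
    url = f"{endpoint}?SERVICE={service}&REQUEST=GetCapabilities&VERSION={version}"
    return urllib.request.Request(url, headers={"Accept-Encoding": "gzip"})


def decode_body(body: bytes, content_encoding: Optional[str]) -> str:
    """Decompress the body if the server answered with Content-Encoding: gzip."""
    if content_encoding and content_encoding.lower() == "gzip":
        body = gzip.decompress(body)
    return body.decode("utf-8")


# Usage against a running server (not executed here):
# req = capabilities_request("http://localhost:8080/rasdaman/ows")
# with urllib.request.urlopen(req) as resp:
#     xml = decode_body(resp.read(), resp.headers.get("Content-Encoding"))
```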

5.3. OGC Coverage Implementation Schema (CIS)

A coverage consists mainly of:

domain set: provides information about where data sit in space and time. All coordinates expressed there are relative to the coverage’s Coordinate Reference System or Native CRS. Both the CRS and its axes, units of measure, etc. are indicated in the domain set. Petascope currently supports grid topologies whose axes are aligned with the axes of the CRS. Along these axes, grid lines can be spaced regularly or irregularly.

range set: the “pixel payload”, i.e. the values (which can be atomic, like in a DEM, or records of values, like in hyperspectral imagery).

range type: the semantics of the range values, given by type information, null values, accuracy, etc.

metadata: a black bag which can contain any data: the coverage will not understand these, but duly transport them along so that the connection between data and metadata is not lost.

Further components include the Envelope, which gives a rough, simplified overview of the coverage’s location in space and time, and the CoverageFunction, which is unused by any implementation known to us.

5.3.1. Coverage CRS

Every coverage, as per OGC CIS, must have exactly one native Coordinate Reference System (CRS), which is given by a URL. Resolving this URL should deliver the CRS definition. The OGC CRS resolver is an example of a public service for resolving CRS URLs; the same service is also bundled in every rasdaman installation, so that it is available locally. More details on this topic can be found in the CRS Management chapter.

Sometimes definitions for CRSs are readily available, such as the 2-D WGS84 with code EPSG:4326 in the EPSG registry. Spatio-temporal CRSs in particular, however, are not always readily available, at least not in all combinations of spatial and temporal axes. To this end, composition of CRSs is supported, so that the single Native CRS can be built from “ingredient” CRSs by concatenating them into a composite one. For instance, a time series of WGS84 images would have the following Native CRS:

http://localhost:8080/def/crs-compound?1=http://localhost:8080/def/crs/OGC/0/AnsiDate&2=http://localhost:8080/def/crs/EPSG/0/4326

Coordinate tuples in this CRS represent an ordered composition of a temporal coordinate expressed in ISO 8601 syntax, such as 2012-01-01T00:01:20Z, followed by latitude and longitude coordinates, as per EPSG:4326.
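The compound URL is simply the component CRS URLs, numbered in axis order and joined. Here is a small helper that assumes the /def/crs-compound path and the resolver host used in the example above. The function name is hypothetical, and the component URLs are left unencoded, as in that example.

```python
def compound_crs_url(resolver: str, *component_crs_urls: str) -> str:
    """Number the component CRS URLs (1, 2, ...) in axis order and join them."""
    parts = [f"{i}={url}" for i, url in enumerate(component_crs_urls, start=1)]
    return f"{resolver}/def/crs-compound?" + "&".join(parts)


resolver = "http://localhost:8080"
time_crs = f"{resolver}/def/crs/OGC/0/AnsiDate"   # temporal axis first
wgs84 = f"{resolver}/def/crs/EPSG/0/4326"         # then Lat, Long
print(compound_crs_url(resolver, time_crs, wgs84))
```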

The native CRS of a coverage domain set can be determined in several ways:

in a WCS GetCapabilities response, the wcs:CoverageSummary/ows:BoundingBox@crs attribute;

in a WCS DescribeCoverage response, the srsName attribute in the gml:domainSet element; furthermore, the axisLabels attribute contains the CRS axis names according to the CRS sequence, and the uomLabels attribute contains the units of measure for each corresponding axis;

in WCPS, the function crsSet(e) returns the CRS of a coverage expression e.
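As a sketch of the DescribeCoverage route, the function below reads `srsName`, `axisLabels`, and `uomLabels` from the first element under `gml:domainSet` that carries an `srsName`. The trimmed XML fragment and the GML 3.2 namespace URI are illustrative assumptions. Real responses are much richer, and the exact element carrying these attributes may differ.

```python
import xml.etree.ElementTree as ET

GML = "{http://www.opengis.net/gml/3.2}"  # assumed GML namespace

# Trimmed, illustrative DescribeCoverage fragment (not a complete response).
SAMPLE = """<wcs:CoverageDescriptions xmlns:wcs="http://www.opengis.net/wcs/2.0"
    xmlns:gml="http://www.opengis.net/gml/3.2">
  <wcs:CoverageDescription>
    <gml:domainSet>
      <gml:RectifiedGrid dimension="2"
          srsName="http://localhost:8080/def/crs/EPSG/0/4326"
          axisLabels="Lat Long" uomLabels="degree degree"/>
    </gml:domainSet>
  </wcs:CoverageDescription>
</wcs:CoverageDescriptions>"""


def native_crs_info(describe_coverage_xml: str) -> dict:
    """Return CRS URL, axis labels and UoM labels found under gml:domainSet."""
    root = ET.fromstring(describe_coverage_xml)
    domain_set = root.find(f".//{GML}domainSet")
    if domain_set is None:
        raise ValueError("no gml:domainSet element found")
    for elem in domain_set.iter():  # includes domainSet itself and all descendants
        if "srsName" in elem.attrib:
            return {
                "crs": elem.get("srsName"),
                "axis_labels": elem.get("axisLabels", "").split(),
                "uom_labels": elem.get("uomLabels", "").split(),
            }
    raise ValueError("no srsName attribute found under gml:domainSet")


print(native_crs_info(SAMPLE))
```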

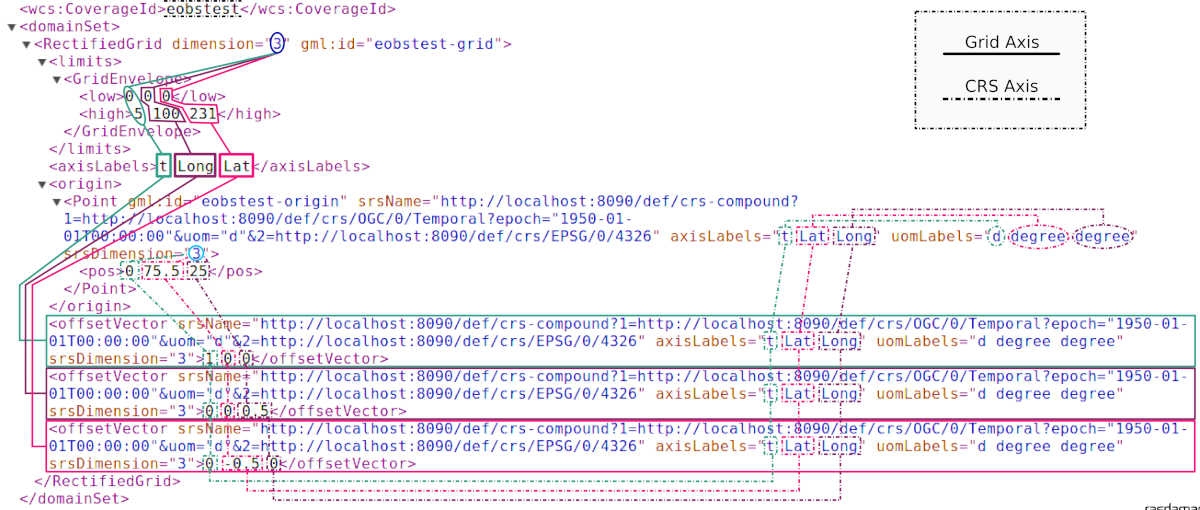

The graphic above illustrates, using an image time series as an example, how dimension, CRS, and axis labels affect the domain set in a CIS 1.0 RectifiedGridCoverage.

Note

This handling of coordinates in CIS 1.0 bears some legacy burden from GML; in the GeneralGridCoverage introduced with CIS 1.1, coordinate handling is much simplified.

5.3.2. Range Type

Range values can be atomic or (possibly nested) records over atomic values, described by the range type. In rasdaman the following atomic data types are supported; all of these can be combined freely in records of values, such as in hyperspectral images or climate variables.

| rasdaman type | size | Quantity types |
|---|---|---|
| | 8 bit | unsignedByte |
| | 8 bit | signedByte |
| | 8 bit | unsignedByte |
| | 16 bit | signedShort |
| | 16 bit | unsignedShort |
| | 32 bit | signedInt |
| | 32 bit | unsignedInt |
| | 32 bit | float32 |
| | 64 bit | float64 |
| | 64 bit | cfloat32 |
| | 128 bit | cfloat64 |

5.3.3. Nil Values

Nil (null) values, as per SWE, are supported by rasdaman in an extended way:

null values can be defined over any data type

nulls can be single values

nulls can be intervals

a null definition in a coverage can be a list of all of the above alternatives.

Full details can be found in the null values section.

Note

It is highly recommended to NOT define single null values over floating-point data, as this causes numerical problems well known in mathematics. This is not related to rasdaman, but intrinsic to the nature and handling of floating-point numbers in computers. A floating-point interval around the desired float null value should be preferred (this corresponds to interval arithmetic in numerical mathematics).
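The rules above (single values, intervals, and lists of both) and the floating-point caveat can be illustrated client-side. This is a hypothetical Python model, not rasdaman's implementation. A single float null misses a value that is mathematically equal but not bit-identical, while a narrow interval catches it.

```python
from typing import Sequence, Tuple, Union

# A null definition entry: a single value or a closed interval (lo, hi).
NullSpec = Union[float, Tuple[float, float]]


def is_null(value: float, nulls: Sequence[NullSpec]) -> bool:
    """True if value matches any single null value or falls in any null interval."""
    for null in nulls:
        if isinstance(null, tuple):
            lo, hi = null
            if lo <= value <= hi:
                return True
        elif value == null:
            return True
    return False


computed = 0.1 + 0.2  # mathematically 0.3, stored as 0.30000000000000004
print(is_null(computed, [0.3]))                       # False: exact match fails
print(is_null(computed, [(0.3 - 1e-9, 0.3 + 1e-9)]))  # True: interval catches it
```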

5.3.4. Errors

Errors from OGC requests to rasdaman are returned to the client formatted as an ows:ExceptionReport (OGC Common Specification).

An ExceptionReport can contain multiple Exception elements.

For example, when running a WCS GetCoverage request or a WCPS query, which executes rasql queries in rasdaman, in case of an error the ExceptionReport will contain two Exception elements:

One with the error message returned from rasdaman.

Another with the rasql query that failed.

For example:

<ows:ExceptionReport>
  <ows:Exception exceptionCode="RasdamanRequestFailed">
    <ows:ExceptionText>The Encode function is applicable to array arguments only.</ows:ExceptionText>
  </ows:Exception>
  <ows:Exception exceptionCode="RasdamanRequestFailed">
    <ows:ExceptionText>Failed internal rasql query: SELECT encode(1, "png" ) FROM mean_summer_airtemp AS c</ows:ExceptionText>
  </ows:Exception>
</ows:ExceptionReport>
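A client can collect all Exception elements from such a report. This sketch assumes the OWS 2.0 namespace URI (http://www.opengis.net/ows/2.0), which the abbreviated example above omits. The function name is hypothetical.

```python
import xml.etree.ElementTree as ET
from typing import List, Tuple

OWS = "{http://www.opengis.net/ows/2.0}"  # assumed OWS namespace

REPORT = """<ows:ExceptionReport xmlns:ows="http://www.opengis.net/ows/2.0">
  <ows:Exception exceptionCode="RasdamanRequestFailed">
    <ows:ExceptionText>The Encode function is applicable to array arguments only.</ows:ExceptionText>
  </ows:Exception>
  <ows:Exception exceptionCode="RasdamanRequestFailed">
    <ows:ExceptionText>Failed internal rasql query: SELECT encode(1, "png" ) FROM mean_summer_airtemp AS c</ows:ExceptionText>
  </ows:Exception>
</ows:ExceptionReport>"""


def parse_exception_report(xml_text: str) -> List[Tuple[str, str]]:
    """Return (exceptionCode, text) pairs for every ows:Exception element."""
    root = ET.fromstring(xml_text)
    result = []
    for exc in root.findall(f"{OWS}Exception"):
        texts = [t.text or "" for t in exc.findall(f"{OWS}ExceptionText")]
        result.append((exc.get("exceptionCode", ""), " ".join(texts)))
    return result


for code, text in parse_exception_report(REPORT):
    print(code, "->", text)
```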

5.4. OGC Web Coverage Service

WCS Core offers the following request types:

GetCapabilities for obtaining a list of coverages offered together with an overall service description;

DescribeCoverage for obtaining information about a coverage without downloading it;

GetCoverage for downloading, extracting, and reformatting coverages; this is the central workhorse of WCS.

WCS Extensions in part enhance GetCoverage with additional functionality controlled by further parameters, and in part establish new request types, such as:

WCS-T, defining InsertCoverage, DeleteCoverage, and UpdateCoverage requests;

WCS Processing, defining ProcessCoverages for submitting WCPS analytics code.

You can use http://localhost:8080/rasdaman/ows as the service endpoint to which WCS requests are sent, for example:

http://localhost:8080/rasdaman/ows?service=WCS&version=2.0.1&request=GetCapabilities

Examples of sending GetCoverage requests to petascope with the curl client as HTTP GET and POST:
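Here is a minimal sketch of both variants using Python's standard library. It only constructs the requests. The coverage id reuses test_mean_summer_airtemp from elsewhere in this guide, and the Lat subset is a placeholder. Sending the same key-value pairs as a form-encoded POST body is an assumption about how petascope accepts POST requests.

```python
import urllib.request
from typing import Iterable, List, Tuple
from urllib.parse import urlencode

ENDPOINT = "http://localhost:8080/rasdaman/ows"


def get_coverage_params(coverage_id: str, fmt: str,
                        subsets: Iterable[str] = ()) -> List[Tuple[str, str]]:
    """Key-value pairs of a GetCoverage request; SUBSET may repeat."""
    params = [("SERVICE", "WCS"), ("VERSION", "2.0.1"), ("REQUEST", "GetCoverage"),
              ("COVERAGEID", coverage_id), ("FORMAT", fmt)]
    params += [("SUBSET", s) for s in subsets]
    return params


def as_get(params: List[Tuple[str, str]]) -> urllib.request.Request:
    """Parameters encoded in the query string."""
    return urllib.request.Request(ENDPOINT + "?" + urlencode(params))


def as_post(params: List[Tuple[str, str]]) -> urllib.request.Request:
    """Same parameters as a form-encoded POST body (assumed accepted by petascope)."""
    body = urlencode(params).encode("ascii")
    return urllib.request.Request(
        ENDPOINT, data=body,
        headers={"Content-Type": "application/x-www-form-urlencoded"})


params = get_coverage_params("test_mean_summer_airtemp", "image/tiff", ["Lat(-30,-20)"])
get_req, post_req = as_get(params), as_post(params)
# Send with urllib.request.urlopen(get_req) or urllib.request.urlopen(post_req).
```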

5.4.1. Subsetting behavior

This section describes how rasdaman translates geo-referenced coordinates (e.g. in spatio-temporal subsets) to internal grid coordinates, which are required in the internal rasql queries generated for each geo request.

This applies to regular geo axes of a coverage. The behavior for irregular axes is explained here.

5.4.1.1. Terminology

General

geo axis - a geo-referenceable axis of a coverage, e.g. Lat, Lon, time, etc.

grid axis - an axis referenceable with grid (integer) coordinates; internally geo axis coordinates have to be translated to grid integer coordinates

subset - a list of trims and/or slices

trim - a pair of lower and upper bounds indicating a range on a geo axis

slice - a single coordinate on a geo axis

Rasdaman-provided information

axis_res - geo resolution (positive or negative) on a regular axis

axis_min - minimum geo axis bound

axis_max - maximum geo axis bound

axis_grid_min - minimum grid axis bound

User-provided information

subset_min - minimum trim geo bound, or a slice coordinate

subset_max - maximum trim geo bound (none if it is a slicing operation)

5.4.1.2. Preconditions

Valid trims on a geo axis must fulfill the following precondition:

axis_min <= subset_min <= subset_max <= axis_max

Valid slices on a geo axis must fulfill the following preconditions:

if axis_res > 0 then axis_min <= subset_min < axis_max

if axis_res < 0 then axis_min < subset_min <= axis_max

Furthermore, for irregular axes:

Slicing: exactly one of the axis coefficients must be equal to the slice coordinate subset_min, or a coefficient’s area of validity (if defined) must intersect it.

Trimming: at least one of the axis coefficients must be within the [subset_min, subset_max] interval, or at least one coefficient’s area of validity (if defined) must intersect it.
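The regular-axis preconditions can be checked on the client before a request is sent. Here is a hypothetical pair of helpers for that check. Irregular axes are not covered.

```python
def valid_trim(axis_min: float, axis_max: float,
               subset_min: float, subset_max: float) -> bool:
    """Trim precondition: axis_min <= subset_min <= subset_max <= axis_max."""
    return axis_min <= subset_min <= subset_max <= axis_max


def valid_slice(axis_min: float, axis_max: float,
                axis_res: float, coord: float) -> bool:
    """Slice precondition; which end is open depends on the sign of the resolution."""
    if axis_res > 0:
        return axis_min <= coord < axis_max
    if axis_res < 0:
        return axis_min < coord <= axis_max
    raise ValueError("a regular axis needs a non-zero resolution")


print(valid_slice(-90, 90, -1, -89.0), valid_trim(-180, 180, 0.0, 180.0))
```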

5.4.1.3. Geo-to-grid coordinate translation

Translating geo coordinates to grid coordinates on a regular axis is done with the formula below. It is assumed that the preconditions in the previous section are already satisfied.

import math

def geo_to_grid(subset_min, subset_max, axis_min, axis_max, axis_res, axis_grid_min):
    # For a slice, subset_max is passed equal to subset_min.

    # 1. A small value is subtracted from subset_max to emulate a right-open interval.
    # It cannot be a fixed value, as it eventually becomes ineffective at certain
    # magnitudes; for that reason it is scaled to the resolution.
    epsilon = abs(axis_res) * 1e-11

    # 2. Handle axis Y (e.g. Lat) with negative axis_res = opposite behavior:
    # swap coordinates and limits; this allows using the same formula in 3a/b
    # below for both positive and negative resolution.
    if axis_res < 0:
        epsilon *= -1
        # the origin for negative resolution is the upper subset/axis bound
        subset_min, subset_max = subset_max, subset_min
        axis_min, axis_max = axis_max, axis_min

    # 3a. Calculate grid low bound
    grid_min_tmp = (subset_min - axis_min) / axis_res
    if grid_min_tmp != math.floor(grid_min_tmp) and grid_min_tmp < 0:
        # for an axis with negative resolution where grid min is a float number, it needs
        # to be adjusted, e.g. -5.00000000000001 should be shifted to -5 instead of -6
        grid_min_tmp = grid_min_tmp + abs(epsilon)
    # floor is used to make sure that the result is an integer grid coordinate;
    # some rounding method must be used, and floor works well with a left-closed interval.
    # NOTE: axis_grid_min is used because the axis grid lower bound is not always 0.
    grid_min = axis_grid_min + math.floor(grid_min_tmp)

    # 3b. Calculate grid high bound
    if subset_min != subset_max:
        # The formula is analogous to the one above for grid_min, but
        # a small epsilon is subtracted from subset_max to emulate a right-open interval.
        grid_max = axis_grid_min + math.floor(((subset_max - epsilon) - axis_min) / axis_res)

    # 4. Output of the formula
    if subset_min != subset_max:
        return (grid_min, grid_max)  # trim
    else:
        return grid_min  # slice

Example. The following subset performs slicing on the Lat axis and trimming on the Lon axis of coverage $c in WCPS: $c[Lat(-89.0), Lon(0.0, 180.0)].

The formula values provided by rasdaman are as follows:

Axis Lon: axis_res=1, axis_min=-180, axis_max=180, axis_grid_min=0

Axis Lat: axis_res=-1, axis_min=-90, axis_max=90, axis_grid_min=0

The formula would return the following grid indices:

Lat(-89.0) -> 179

Lon(0.0, 180.0) -> (180, 359)
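The grid indices of the example can be checked by hand with the formula above. For Lat, the negative resolution means axis_min and axis_max are swapped first, so the origin becomes 90. For the Lon trim, epsilon = |1| · 1e-11:

```latex
\begin{aligned}
\text{Lat}(-89.0):\quad & \text{grid\_min} = 0 + \left\lfloor \frac{-89 - 90}{-1} \right\rfloor = 179 \\
\text{Lon}(0.0, 180.0):\quad & \text{grid\_min} = 0 + \left\lfloor \frac{0 - (-180)}{1} \right\rfloor = 180 \\
& \text{grid\_max} = 0 + \left\lfloor \frac{(180 - 10^{-11}) - (-180)}{1} \right\rfloor = \lfloor 359.99999999999 \rfloor = 359
\end{aligned}
```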

5.4.2. CIS 1.0 to 1.1 Transformation

Under WCS 2.1, i.e. with VERSION=2.1.0, both DescribeCoverage and GetCoverage requests understand the proprietary parameter OUTPUTTYPE=GeneralGridCoverage, which formats the result as a CIS 1.1 GeneralGridCoverage even if it has been imported into the server as a CIS 1.0 coverage, for example:

http://localhost:8080/rasdaman/ows?SERVICE=WCS&VERSION=2.1.0&REQUEST=DescribeCoverage&COVERAGEID=test_mean_summer_airtemp&OUTPUTTYPE=GeneralGridCoverage

http://localhost:8080/rasdaman/ows?SERVICE=WCS&VERSION=2.1.0&REQUEST=GetCoverage&COVERAGEID=test_mean_summer_airtemp&FORMAT=application/gml+xml&OUTPUTTYPE=GeneralGridCoverage

5.4.3. Polygon/Raster Clipping

WCS and WCPS support clipping with polygons expressed in the WKT format.

Polygons can be MultiPolygon (2D), Polygon (2D), and LineString (1D+). The result is always a 2D coverage in the case of MultiPolygon and Polygon, and a 1D coverage in the case of LineString.

Further clipping patterns include curtain and corridor clipping on 3D+ coverages, from Polygon (2D) and LineString (1D). The result of curtain clipping has the same dimensionality as the input coverage, whereas the result of corridor clipping is always a 3D coverage, with the first axis being the trackline of the corridor by convention.

In WCS, clipping is expressed by adding a &CLIP= parameter to the request. If the SUBSETTINGCRS parameter is specified, then this CRS also applies to the clipping WKT; otherwise, it is assumed that the WKT is in the native coverage CRS. In WCPS, clipping is done with a clip function, much like in rasql.
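Here is a sketch of the WCS form, which builds a closed WKT polygon and attaches it as the CLIP parameter. The coverage id, the coordinates, the output format, and the optional SUBSETTINGCRS URL are placeholders. The coordinates are written in the axis order of whichever CRS the WKT is assumed to be in.

```python
from typing import Optional, Sequence, Tuple
from urllib.parse import urlencode


def wkt_polygon(points: Sequence[Tuple[float, float]]) -> str:
    """Format vertices as a WKT POLYGON, closing the ring if necessary."""
    if len(points) < 3:
        raise ValueError("a polygon needs at least 3 vertices")
    ring = list(points)
    if ring[0] != ring[-1]:
        ring.append(ring[0])  # WKT rings must end at their first vertex
    coords = ", ".join(f"{x} {y}" for x, y in ring)
    return f"POLYGON(({coords}))"


def clip_url(endpoint: str, coverage_id: str, wkt: str,
             fmt: str = "image/tiff", subsetting_crs: Optional[str] = None) -> str:
    """GetCoverage URL with a CLIP parameter and an optional SUBSETTINGCRS."""
    params = [("SERVICE", "WCS"), ("VERSION", "2.0.1"), ("REQUEST", "GetCoverage"),
              ("COVERAGEID", coverage_id), ("FORMAT", fmt), ("CLIP", wkt)]
    if subsetting_crs:
        params.append(("SUBSETTINGCRS", subsetting_crs))
    return endpoint + "?" + urlencode(params)


polygon = wkt_polygon([(0, 0), (10, 0), (10, 10)])  # placeholder coordinates
url = clip_url("http://localhost:8080/rasdaman/ows", "my_coverage", polygon,
               subsetting_crs="http://localhost:8080/def/crs/EPSG/0/3857")
print(url)
```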

Further information can be found in the rasql clipping section. Below we list examples illustrating the functionality in WCS and WCPS.

5.4.3.1. Clipping Examples

Polygon clipping on a coverage with native CRS EPSG:4326, for example:

WCS:

WCPS:

Polygon clipping with coordinates in EPSG:3857 (from the subsettingCRS parameter) on a coverage with native CRS EPSG:4326, for example:

WCS:

WCPS:

LineString clipping on a 3D coverage with axes X, Y, ansidate, for example:

WCS:

WCPS:

Multipolygon clipping on 2D coverage, for example:

WCS:

WCPS:

Curtain clipping by a Linestring on 3D coverage, for example:

Curtain clipping by a Polygon on 3D coverage, for example:

Corridor clipping by a Linestring on 3D coverage, for example:

Corridor clipping by a Polygon on 3D coverage, for example:

Note

Subspace clipping is not supported in WCS or WCPS.

5.4.4. Areas of validity on irregular axes

By default, coefficients on an irregular axis act as single points: subsetting on such an axis must specify exactly the coefficient (slice), or contain the coefficient itself in the lower/upper bounds (trim).

It is possible to customize this behavior when importing data by specifying areas of validity which extend the coefficients from single points into intervals with a start and an end; this concept is also known as footprints or sample space. Refer to the corresponding import documentation on how to specify the areas of validity.

The areas of validity specify a closed interval [start, end] around each coefficient on an irregular axis, such that start <= coefficient <= end. Areas of validity may not overlap.

The start and end may be specified with less than millisecond precision, e.g. "2010" and "2012-05". In this case they are expanded to millisecond precision internally, such that start is the earliest possible datetime starting with "2010" (i.e. "2010-01-01T00:00:00.000Z") and end is the latest possible datetime starting with "2012-05" (i.e. "2012-05-31T23:59:59.999Z"). The same semantics apply to subsetting in queries, see Temporal subsets.
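The expansion rule can be sketched as follows for date-only inputs (year, year-month, or full date). Time-of-day components and time zones are out of scope for this hypothetical helper.

```python
import calendar
from datetime import datetime
from typing import List

ISO_SECONDS = "%Y-%m-%dT%H:%M:%S"


def _date_parts(partial: str) -> List[int]:
    """Split "YYYY", "YYYY-MM" or "YYYY-MM-DD" into integers."""
    parts = [int(p) for p in partial.split("-")]
    if not 1 <= len(parts) <= 3:
        raise ValueError(f"unsupported date precision: {partial!r}")
    return parts


def expand_start(partial: str) -> str:
    """Earliest millisecond-precision datetime starting with `partial`."""
    year, month, day = (_date_parts(partial) + [1, 1])[:3]
    return datetime(year, month, day).strftime(ISO_SECONDS) + ".000Z"


def expand_end(partial: str) -> str:
    """Latest millisecond-precision datetime starting with `partial`."""
    parts = _date_parts(partial)
    year = parts[0]
    month = parts[1] if len(parts) > 1 else 12
    # last day of the month, taking leap years into account
    day = parts[2] if len(parts) > 2 else calendar.monthrange(year, month)[1]
    return datetime(year, month, day, 23, 59, 59).strftime(ISO_SECONDS) + ".999Z"


print(expand_start("2010"), expand_end("2012-05"))
```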

The effect on subsetting is as follows:

slicing: a coordinate C will select the coefficient with an area of validity that intersects C. For example, if the coefficient is "2010" (resolved when importing in petascope as "2010-01-01T00:00:00.000Z") and its area of validity has start "2009-01-01" and end "2011-12-31", then slicing at coordinate "2009-05-01" will return the coefficient, as will slicing at "2010-12-31", and at anything else between the start and (not including) the end.

trimming: an interval lo:hi will select all coefficients with areas of validity that intersect or are contained in the [lo,hi] interval.
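The selection rules can be modeled with intervals. This hypothetical sketch treats each area as the closed interval [start, end] defined above. How petascope handles a coordinate lying exactly at `end` is an assumption of this sketch, not something it establishes.

```python
from datetime import datetime
from typing import List, Sequence, Tuple

Area = Tuple[datetime, datetime]  # closed area of validity [start, end]


def slice_select(areas: Sequence[Area], coord: datetime) -> List[int]:
    """Indices of coefficients whose area of validity contains coord."""
    return [i for i, (start, end) in enumerate(areas) if start <= coord <= end]


def trim_select(areas: Sequence[Area], lo: datetime, hi: datetime) -> List[int]:
    """Indices of coefficients whose area intersects, or lies within, [lo, hi]."""
    return [i for i, (start, end) in enumerate(areas) if start <= hi and lo <= end]


# Coefficient 0 uses the area from the example above; coefficient 1 is made up.
areas = [
    (datetime(2009, 1, 1), datetime(2011, 12, 31, 23, 59, 59, 999000)),
    (datetime(2012, 1, 1), datetime(2012, 12, 31, 23, 59, 59, 999000)),
]
print(slice_select(areas, datetime(2009, 5, 1)))
print(trim_select(areas, datetime(2011, 6, 1), datetime(2012, 2, 1)))
```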

If a coverage was imported with custom areas of validity, they will be listed in the WCS DescribeCoverage response under the XML element <ras:covMetadata>. A <ras:axes> element contains a <ras:axis> child element for each coverage axis with areas of validity, which are then listed as <ras:areasOfValidity> children with start and end attributes, e.g.:

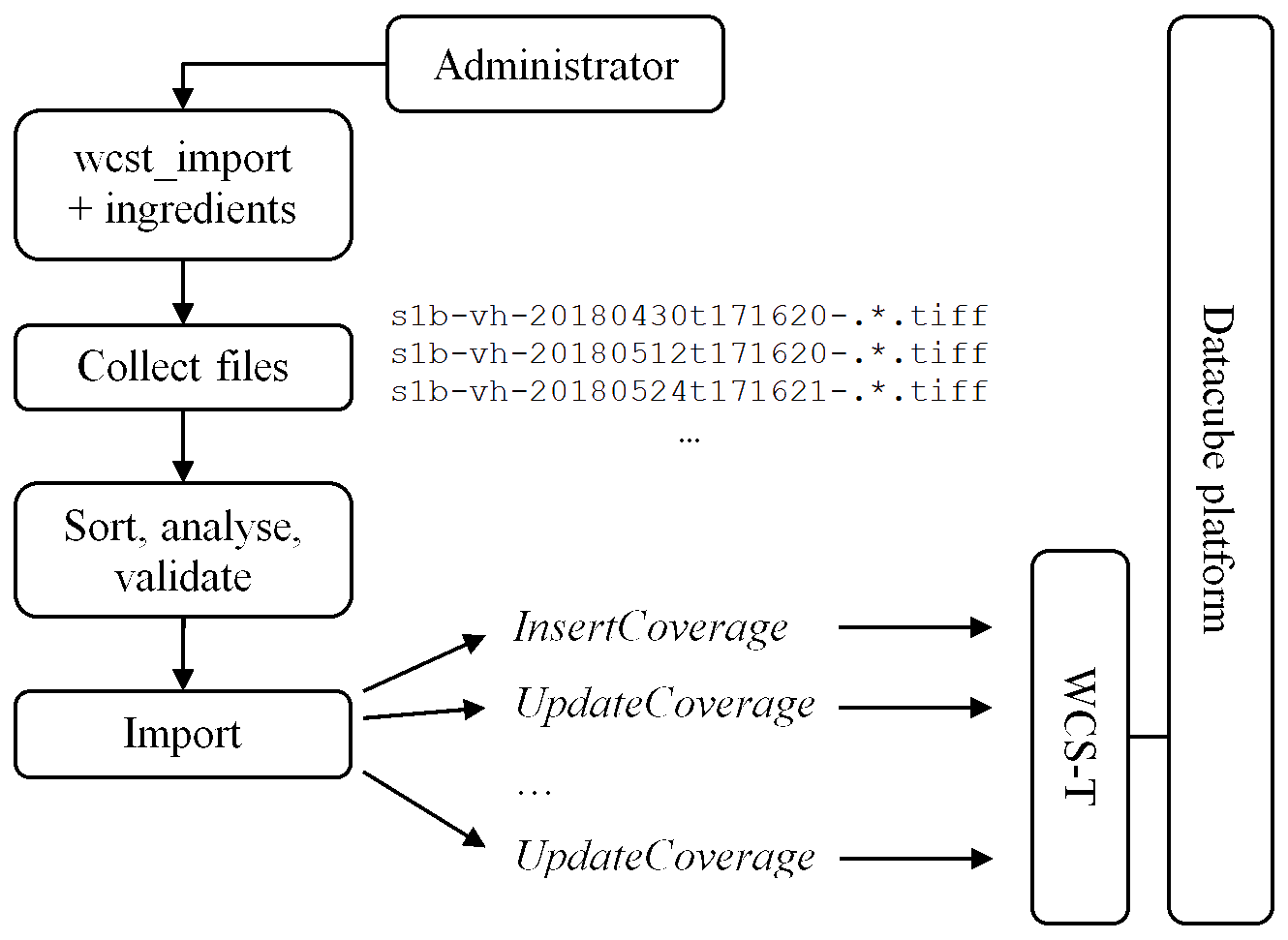

5.4.5. WCS-T

Currently, WCS-T supports importing coverages in GML format. The metadata of the coverage is thus explicitly specified, while the raw cell values can be stored either explicitly in the GML body, or in an external file linked in the GML body, as shown in the examples below. The format of the file storing the cell values must be one of:

2-D data supported by the GDAL library, such as TIFF / GeoTIFF, JPEG / JPEG2000, PNG, etc.;

n-D data in NetCDF or GRIB format

In addition to the WCS-T standard parameters petascope supports additional proprietary parameters, covered in the following sections.

Note

For coverage management, WCS-T is normally not used directly. Rather, the more convenient wcst_import Python tool is recommended for Data Import.

5.4.5.1. Inserting coverages

Inserting a new coverage into the server’s WCS offerings is done using the InsertCoverage request.

| Request Parameter | Value | Description | Required |
|---|---|---|---|
| SERVICE | WCS | service standard | Yes |
| VERSION | 2.0.1 or later | WCS version used | Yes |
| REQUEST | InsertCoverage | Request type to be performed | Yes |
| INPUTCOVERAGEREF | {url} | URL pointing to the coverage to be inserted | One of inputCoverageRef or inputCoverage is required |
| INPUTCOVERAGE | {coverage} | A coverage to be inserted | One of inputCoverageRef or inputCoverage is required |
| USEID | new \| existing | Indicates whether to use the coverage’s id (“existing”) or to generate a new unique one (“new”) | No (default: existing) |

| Request Parameter | Value | Description | Required |
|---|---|---|---|
| PIXELDATATYPE | GDAL-supported base data type (e.g. “Float32”) or comma-separated concatenated data types (e.g. “Float32,Int32,Float32”) | In cases where range values are given in the GML body, the data type can be indicated through this parameter. Default: Byte. | No |
| TILING | rasdaman tiling clause, see Storage Layout Language | Indicates the array tiling to be applied during insertion | No |

The response of a successful InsertCoverage request is the coverage id of the newly inserted coverage. For example, the coverage available at http://schemas.opengis.net/gmlcov/1.0/examples/exampleRectifiedGridCoverage-1.xml can be imported with the following request:

http://localhost:8080/rasdaman/ows?SERVICE=WCS&VERSION=2.0.1&REQUEST=InsertCoverage&COVERAGEREF=http://schemas.opengis.net/gmlcov/1.0/examples/exampleRectifiedGridCoverage-1.xml

The following example shows how to insert a coverage stored on the server on which rasdaman runs. The cell values are stored in a TIFF file (attachment:myCov.gml), the coverage id is generated by the server and aligned tiling is used for the array storing the cell values:

http://localhost:8080/rasdaman/ows?SERVICE=WCS&VERSION=2.0.1&REQUEST=InsertCoverage&COVERAGEREF=file:///etc/data/myCov.gml&USEID=new&TILING=aligned[0:500,0:500]
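When such URLs are built programmatically, values like the TILING clause and file URLs should be percent-encoded. This sketch uses the COVERAGEREF parameter name from the examples above. The helper name is hypothetical.

```python
from typing import Optional
from urllib.parse import urlencode


def insert_coverage_url(endpoint: str, coverage_ref: str,
                        use_id: Optional[str] = None,
                        tiling: Optional[str] = None) -> str:
    """InsertCoverage URL; optional USEID and TILING parameters are appended."""
    params = [("SERVICE", "WCS"), ("VERSION", "2.0.1"),
              ("REQUEST", "InsertCoverage"), ("COVERAGEREF", coverage_ref)]
    if use_id:
        params.append(("USEID", use_id))
    if tiling:
        params.append(("TILING", tiling))  # brackets, colons, commas get encoded
    return endpoint + "?" + urlencode(params)


url = insert_coverage_url("http://localhost:8080/rasdaman/ows",
                          "file:///etc/data/myCov.gml",
                          use_id="new", tiling="aligned[0:500,0:500]")
print(url)
```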

5.4.5.2. Updating Coverages

Updating an existing coverage in the server’s WCS offerings is done using the UpdateCoverage request.

| Request Parameter | Value | Description | Required |
|---|---|---|---|
| SERVICE | WCS | service standard | Yes |
| VERSION | 2.0.1 or later | WCS version used | Yes |
| REQUEST | UpdateCoverage | Request type to be performed | Yes |
| COVERAGEID | {string} | Identifier of the coverage to be updated | Yes |
| INPUTCOVERAGEREF | {url} | URL pointing to the input coverage | One of inputCoverageRef or inputCoverage is required |
| INPUTCOVERAGE | {coverage} | A coverage to be updated | One of inputCoverageRef or inputCoverage is required |
| SUBSET | AxisLabel(geoLowerBound, geoUpperBound) | Trim or slice expression, one per updated coverage dimension | No |

The following example shows how to update an existing coverage test_mr_metadata from a GML file generated by the wcst_import tool:

http://localhost:8080/rasdaman/ows?SERVICE=WCS&VERSION=2.0.1&REQUEST=UpdateCoverage&COVERAGEID=test_mr_metadata&SUBSET=i(0,60)&SUBSET=j(0,40)&INPUTCOVERAGEREF=file:///tmp/4514863c_55bb_462f_a4d9_5a3143c0e467.gml
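Because one SUBSET parameter is given per updated dimension, the query string contains repeated keys. Passing a list of pairs to `urlencode` preserves them. The input file path below is a placeholder, and the helper name is hypothetical.

```python
from typing import Sequence, Tuple
from urllib.parse import urlencode


def update_coverage_url(endpoint: str, coverage_id: str, input_ref: str,
                        subsets: Sequence[Tuple[str, int, int]]) -> str:
    """UpdateCoverage URL with one SUBSET=axis(lo,hi) parameter per dimension."""
    params = [("SERVICE", "WCS"), ("VERSION", "2.0.1"),
              ("REQUEST", "UpdateCoverage"), ("COVERAGEID", coverage_id)]
    # A list of pairs (rather than a dict) keeps the repeated SUBSET keys.
    params += [("SUBSET", f"{axis}({lo},{hi})") for axis, lo, hi in subsets]
    params.append(("INPUTCOVERAGEREF", input_ref))
    return endpoint + "?" + urlencode(params)


url = update_coverage_url("http://localhost:8080/rasdaman/ows", "test_mr_metadata",
                          "file:///tmp/update.gml",  # placeholder path
                          [("i", 0, 60), ("j", 0, 40)])
print(url)
```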

5.4.5.3. Deleting Coverages

The DeleteCoverage request type serves to delete a coverage, consisting of the underlying rasdaman collection, the associated WMS layer (if it exists), and the petascope metadata.

For example, the coverage test_mr can be deleted as follows:

http://localhost:8080/rasdaman/ows?SERVICE=WCS&VERSION=2.0.1&REQUEST=DeleteCoverage&COVERAGEID=test_mr

5.4.6. Renaming a coverage

The non-standard /rasdaman/admin/coverage/update API allows updating a coverage id and the associated WMS layer, if one exists (v10.0+). For example, the coverage test_mr can be renamed to test_mr_new as follows:

http://localhost:8080/rasdaman/admin/coverage/update?COVERAGEID=test_mr&NEWCOVERAGEID=test_mr_new

5.4.7. Update coverage metadata

Coverage metadata can be updated through the interactive rasdaman WSClient on the OGC WCS > Describe Coverage tab, by selecting a text file (MIME type must be one of text/xml or text/plain) containing the new metadata. Note that to be able to do this, it is necessary to log in first in the Admin tab.

The non-standard API for this feature is at /rasdaman/admin/coverage/update, which operates through multipart/form-data POST requests. The request should contain 2 parts:

the coverageId to update, and

the path to a local text file to be uploaded to the server.

For example, the request below will update the metadata of coverage test_mr_metadata with the one in a local XML file at /home/rasdaman/Downloads/test_metadata.xml by using the curl tool:

curl --form-string "COVERAGEID=test_mr_metadata" \
     -F "file=@/home/rasdaman/Downloads/test_metadata.xml" \
     "http://localhost:8080/rasdaman/admin/coverage/update"

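For clients without curl, the same two-part multipart/form-data body can be assembled with the standard library. The field names COVERAGEID and file are taken from the curl command above. The boundary generation, the text/xml type of the file part, and the absence of credentials (as in the curl example) are assumptions of this sketch.

```python
import urllib.request
import uuid
from typing import Dict


def multipart_request(url: str, fields: Dict[str, str], file_field: str,
                      filename: str, file_bytes: bytes,
                      content_type: str = "text/xml") -> urllib.request.Request:
    """Build a multipart/form-data POST with text fields plus one file part."""
    boundary = uuid.uuid4().hex
    lines = []
    for name, value in fields.items():
        lines += [f"--{boundary}".encode(),
                  f'Content-Disposition: form-data; name="{name}"'.encode(),
                  b"", value.encode("utf-8")]
    disposition = f'form-data; name="{file_field}"; filename="{filename}"'
    lines += [f"--{boundary}".encode(),
              f"Content-Disposition: {disposition}".encode(),
              f"Content-Type: {content_type}".encode(),
              b"", file_bytes,
              f"--{boundary}--".encode(), b""]
    body = b"\r\n".join(lines)  # multipart lines are separated by CRLF
    return urllib.request.Request(
        url, data=body,
        headers={"Content-Type": f"multipart/form-data; boundary={boundary}"})


req = multipart_request("http://localhost:8080/rasdaman/admin/coverage/update",
                        {"COVERAGEID": "test_mr_metadata"},
                        "file", "test_metadata.xml", b"<metadata/>")
```

Sending it would be `urllib.request.urlopen(req)`. If the endpoint requires authentication, as the other admin examples in this section suggest, an Authorization header has to be added as well.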
5.4.8. Update coverage’s null values

A coverage’s null values can be updated via the non-standard API at the /rasdaman/admin/coverage/nullvalues/update endpoint with two mandatory parameters:

coverageId: the name of the coverage to be updated

nullvalues: null values of the coverage’s band(s), in the format corresponding to rasql, see syntax doc.

Note

The value of nullvalues must be properly encoded by clients for special characters such as [, ], {, }.

Example of using the curl tool to update the null values of a 3-band coverage:

curl 'http://localhost:8080/rasdaman/admin/coverage/nullvalues/update' \
     -d 'COVERAGEID=test_rgb&NULLVALUES=[35, 25:35, 35:35]' \
     -u rasadmin:rasadmin
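To satisfy the encoding note above, a client can build the form body with `urlencode`. curl's `--data-urlencode` option serves the same purpose. The `format_nulls` helper is hypothetical and simply reproduces the bracketed list syntax of the curl example.

```python
from typing import Sequence, Tuple, Union
from urllib.parse import urlencode

NullEntry = Union[int, float, Tuple[float, float]]


def format_nulls(nulls: Sequence[NullEntry]) -> str:
    """Render single values and (lo, hi) intervals as "[v, lo:hi, ...]"."""
    items = [f"{n[0]}:{n[1]}" if isinstance(n, tuple) else str(n) for n in nulls]
    return "[" + ", ".join(items) + "]"


nulls = format_nulls([35, (25, 35), (35, 35)])
body = urlencode({"COVERAGEID": "test_rgb", "NULLVALUES": nulls})
print(body)  # brackets, commas, colons and spaces are percent-encoded
```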

5.4.9. Update coverage range type

The rangeType of a coverage can be updated via the /rasdaman/admin/coverage/rangetype/update endpoint API with two mandatory parameters:

coverageId: the name of the coverage to be updated

rangeType: an XML string describing the coverage’s bands according to the Sensor Web Enablement (SWE) standards, with root element <swe:DataRecord>.

Example of using the curl tool to update the range type of a 2-band coverage to one band as swe:Quantity and one band as swe:Category.

The updated_range.xml file contains:

<swe:DataRecord>
  <swe:field name="Band1">
    <swe:Quantity definition="http://www.opengis.net/def/dataType/OGC/0/UnsignedInt">
      <swe:label>Band 1 label</swe:label>
      <swe:description>Band 1 description</swe:description>
      <swe:nilValues>
        <swe:NilValues>
          <swe:nilValue reason="Null by no data">25</swe:nilValue>
        </swe:NilValues>
      </swe:nilValues>
      <swe:uom code="10^0"/>
    </swe:Quantity>
  </swe:field>
  <swe:field name="Band2">
    <swe:Category definition="Band 2 definition which is an attribute">
      <swe:description/>
      <swe:nilValues>
        <swe:NilValues>
          <swe:nilValue reason="Null value from interval">25:35</swe:nilValue>
          <swe:nilValue reason="Null value from a single value">57</swe:nilValue>
        </swe:NilValues>
      </swe:nilValues>
      <swe:codeSpace xlink:href="http://code.list.org/"/>
    </swe:Category>
  </swe:field>
</swe:DataRecord>

curl -u rasadmin:rasadmin \

'http://localhost:8080/rasdaman/admin/coverage/rangetype/update' \

--data-urlencode COVERAGEID=test_cov \

--data-urlencode RANGETYPE@updated_range.xml

5.4.10. Convert irregular axis to regular axis

This feature allows converting a suitable irregular axis to a regular axis, when the irregular axis has equal distances between its coefficients. A typical use case is converting an irregular time axis with daily / hourly coefficients to a regular time axis with a time step (time resolution) of one day / hour.

The endpoint API is /rasdaman/admin/coverage/update with three mandatory parameters:

coverageId: name of the coverage to be updated

axis: name of the irregular axis to be converted

newtype: the only possible value is RegularAxis

Example of using the curl command-line tool to convert the axis ansi in a coverage test_cov to a regular axis:

curl -u rasadmin:rasadmin 'http://localhost:8080/rasdaman/admin/coverage/update' \

-d "COVERAGEID=test_cov&axis=ansi&newtype=RegularAxis"
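The conversion requires equal distances between coefficients, so a client may want to check this before calling the endpoint. Here is a hypothetical pre-check that works for numbers and datetimes. Monthly coefficients, for example, are not equally spaced in days, and for floats an exact comparison of differences may be too strict.

```python
from datetime import datetime, timedelta
from typing import Any, Optional, Sequence


def regular_step(coefficients: Sequence[Any]) -> Optional[Any]:
    """Common step between consecutive coefficients, or None if it varies."""
    if len(coefficients) < 2:
        return None
    steps = {b - a for a, b in zip(coefficients, coefficients[1:])}
    return steps.pop() if len(steps) == 1 else None


daily = [datetime(2020, 1, 1) + timedelta(days=i) for i in range(5)]
print(regular_step(daily))
```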

5.4.11. Update regular axis’ origin

This feature allows updating the origin of an existing regular axis in a coverage, which effectively changes the geo lower and upper bounds of this axis. It is used when the geo bounds of a regular axis were shifted to unwanted values after the coverage was imported, and the admin wants to update them to proper values.

The endpoint API is /rasdaman/admin/coverage/update with three mandatory parameters:

coverageId: name of the coverage to be updated

axis: name of the regular axis to be updated

origin: the new origin of the axis. It can be in ISO datetime format (e.g. "1960-12-31T12:00:00.000Z") if the axis is temporal, or a number (e.g. 23.5) otherwise.

Note

New geo bounds are calculated in petascope based on the origin as follows.

If axis’ resolution is positive (e.g. lon, E, X, AnsiDate,…) then:

newGeoLowerBound = origin - 0.5 * resolution

newGeoUpperBound = newGeoLowerBound + total_grid_pixels * resolution

If axis’ resolution is negative (e.g. lat, N, Y,…) then:

newGeoUpperBound = origin + 0.5 * abs(resolution)

newGeoLowerBound = newGeoUpperBound + total_grid_pixels * resolution
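The formulas can be reproduced to preview the new bounds before calling the endpoint. This hypothetical helper works for plain numbers and for datetime origins with a timedelta resolution.

```python
from datetime import datetime, timedelta


def bounds_from_origin(origin, resolution, total_grid_pixels: int):
    """New (lower, upper) geo bounds of a regular axis from its origin."""
    zero = resolution * 0  # 0 for numbers, timedelta(0) for time steps
    if resolution > zero:      # e.g. lon, E, X, AnsiDate
        lower = origin - 0.5 * resolution
        upper = lower + total_grid_pixels * resolution
    elif resolution < zero:    # e.g. lat, N, Y
        upper = origin + 0.5 * abs(resolution)
        lower = upper + total_grid_pixels * resolution
    else:
        raise ValueError("resolution must be non-zero")
    return lower, upper


day = timedelta(days=1)
# pixel count derived from the "before" extent of the example below
pixels = (datetime(2024, 6, 24, 12) - datetime(1960, 12, 31, 12)) // day
print(bounds_from_origin(datetime(1961, 1, 1, 12), day, pixels))
```

With origin 1961-01-01T12:00:00, a 1-day resolution, and the pixel count of the original extent in the example below, it yields 1961-01-01T00:00:00 and 2024-06-25T00:00:00.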

Example of using the curl command-line tool to update the origin of axis ansi with resolution = 1 day in a coverage test_cov.

Before updating, the extent of the axis is: ["1960-12-31T12:00:00.000Z":"2024-06-24T12:00:00.000Z"].

After updating, the extent of the axis is: ["1961-01-01T00:00:00.000Z":"2024-06-25T00:00:00.000Z"].

curl -u rasadmin:rasadmin 'http://localhost:8080/rasdaman/admin/coverage/update' \

-d 'COVERAGEID=test_cov&axis=ansi&origin="1961-01-01T12:00:00.000Z"'

5.4.12. INSPIRE Coverages

The INSPIRE Download Service is an implementation of the Technical Guidance for the implementation of INSPIRE Download Services using Web Coverage Services (WCS) version 2.0+.

In order to achieve INSPIRE Download Service compliance, the following enhancements have been implemented in rasdaman for the WCS GetCapabilities response:

Under <ows:OperationsMetadata> there is a new section for INSPIRE metadata for the service. For example, the result below contains two INSPIRE coverages, cov_1 and cov_2.

Service Metadata URL field (<inspire_common:URL>): a URL containing the location of the metadata associated with the WCS service, which is configured by setting inspire_common_url in petascope.properties.

Under the <inspire_common:SupportedLanguages> section, the supported language is fixed to eng (English) only.

A coverage is considered an INSPIRE coverage if it has a specific URL set by the metadataURL attribute. All INSPIRE coverages are listed as <inspire_dls:SpatialDataSetIdentifier> XML elements. The example above contains two INSPIRE coverages, each <inspire_dls:SpatialDataSetIdentifier> element containing a metadataURL attribute that provides more information about the coverage. The value of the <inspire_common:Namespace> element of each INSPIRE coverage is derived from the service endpoint.

5.4.12.1. Create an INSPIRE coverage

Controlling whether a local coverage is treated as an INSPIRE coverage can be done by:

Manually sending a request to /rasdaman/admin/inspire/metadata/update with two mandatory parameters:

COVERAGEID - the coverage to be converted to an INSPIRE coverage

METADATAURL - a URL to an INSPIRE-compliant catalog entry for this coverage; if set to empty, i.e. METADATAURL=, then the coverage is marked as a non-INSPIRE coverage.

For example, the coverage test_inspire_metadata can be marked as an INSPIRE coverage as follows:

curl --user rasadmin:rasadmin -X POST \
     --form-string 'COVERAGEID=test_inspire_metadata' \
     -F 'METADATAURL=https://inspire-geoportal.ec.europa.eu/16.iso19139.xml' \
     'http://localhost:8080/rasdaman/admin/inspire/metadata/update'

Via wcst_import.sh, in an ingredients file whose inspire section contains the settings for importing an INSPIRE coverage:

metadata_url - if set to a non-empty string, then the imported coverage will be marked as an INSPIRE coverage. If set to an empty string or omitted, then the coverage will be updated as a non-INSPIRE coverage.

For example, the coverage cov_3 will be imported as an INSPIRE coverage with this configuration in the ingredients file:

5.4.13. Coverage thumbnail¶

Each coverage can have a thumbnail, a small PNG or JPEG image that provides a quick visual overview of the coverage. To get the thumbnail of a coverage <coverageId> (no credentials required):

http://localhost:8080/rasdaman/ows/coverage/thumbnail?COVERAGEID=<coverageId>

If the coverage has a thumbnail, then the image will be returned.

If the coverage has no thumbnail but has an associated WMS layer, then rasdaman will use a WMS GetMap request to render the most recent temporal slice as an image of size 250 x 250. If the request has no credentials and the server requires authentication, then petascope returns an HTTP 404 error.

If the coverage has neither a thumbnail nor an associated WMS layer, an exception is thrown.

A thumbnail can be set by:

Adding the thumbnail_path setting in the wcst_import ingredients file when importing a new coverage (docs)

Sending a request to the /rasdaman/admin/coverage/thumbnail/update API with the COVERAGEID parameter for the coverage ID and the thumbnail image for the parameter THUMBNAILGRAPHIC:

# NOTE: An @ character preceding the image file path is required in order to upload its contents
curl -u rasadmin:rasadmin 'http://localhost:8080/rasdaman/admin/coverage/thumbnail/update' \
  -F 'COVERAGEID=test_wms_4326' -F 'THUMBNAILGRAPHIC=@/tmp/thumbnail.jpg'

Alternatively, the image file could be converted to a base64 text string and uploaded as follows:

# NOTE: the file size that can generally be uploaded in this way
# is limited to about 100 kB, depending on OS limits for command-line
# argument sizes.
coverage_id="test_coverage"
thumbnail="/tmp/thumbnail_image.png"
# file -b omits the file name, so only the detected image type is matched
format=$(file -b "$thumbnail" | grep -iEo 'jpeg|png' | head -n1 | tr '[:upper:]' '[:lower:]')
base64_str=$(base64 -w 0 "$thumbnail")
base64_final="data:image/$format;base64,$base64_str"
api_url="http://localhost:8080/rasdaman/admin/coverage/thumbnail/update"
# --data-urlencode keeps the '+', '/' and '=' characters of the base64 text intact
curl -u 'rasadmin:rasadmin' "$api_url?COVERAGEID=$coverage_id" \
  --data-urlencode "THUMBNAILGRAPHIC=$base64_final"

In either case, it is recommended for the thumbnail to be an image of size 250x250 pixels.
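For clients written in Python rather than shell, the base64 variant can be prepared with the standard library alone. The sketch below only builds the form body carrying the THUMBNAILGRAPHIC data URL; it detects the image type from the PNG/JPEG file signatures instead of the `file` command, and the helper names are hypothetical. URL-encoding the body matters because base64 text can contain `+`, `/` and `=`.

```python
import base64
from urllib.parse import urlencode

PNG_MAGIC = b"\x89PNG\r\n\x1a\n"
JPEG_MAGIC = b"\xff\xd8\xff"


def image_format(data: bytes) -> str:
    """Return 'png' or 'jpeg' based on the file signature (hypothetical helper)."""
    if data.startswith(PNG_MAGIC):
        return "png"
    if data.startswith(JPEG_MAGIC):
        return "jpeg"
    raise ValueError("thumbnail must be a PNG or JPEG image")


def thumbnail_form_body(data: bytes) -> str:
    """Build a URL-encoded form body with a data URL (hypothetical helper)."""
    b64 = base64.b64encode(data).decode("ascii")
    data_url = f"data:image/{image_format(data)};base64,{b64}"
    # urlencode percent-encodes ':', '/', ';', ',', '+' and '=' inside the value
    return urlencode({"THUMBNAILGRAPHIC": data_url})
```

The resulting string would be POSTed as `application/x-www-form-urlencoded` to the update endpoint with `COVERAGEID` in the query string, as in the curl example.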

5.4.14. Check if a coverage exists¶

Rasdaman offers a non-standard API to check whether a coverage exists, which is simpler and faster than a GetCapabilities or DescribeCoverage request. The result is a true/false string literal.

Example:

http://localhost:8080/rasdaman/admin/coverage/exist?coverageId=cov1
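A client-side sketch of calling this endpoint with Python's standard library; the function names are hypothetical, and the base URL is assumed to be the local deployment used throughout this guide. Parsing is kept separate from the HTTP call so that it can be checked without a running server.

```python
from urllib.parse import urlencode
from urllib.request import urlopen

BASE_URL = "http://localhost:8080/rasdaman"  # assumed deployment


def exists_url(coverage_id: str, base_url: str = BASE_URL) -> str:
    """URL of the non-standard coverage existence check."""
    return f"{base_url}/admin/coverage/exist?" + urlencode({"coverageId": coverage_id})


def parse_exists(body: str) -> bool:
    """Interpret the 'true' / 'false' literal returned by the endpoint."""
    value = body.strip().lower()
    if value not in ("true", "false"):
        raise ValueError(f"unexpected response: {body!r}")
    return value == "true"


def coverage_exists(coverage_id: str) -> bool:
    """Query the server (requires a running rasdaman instance)."""
    with urlopen(exists_url(coverage_id)) as resp:
        return parse_exists(resp.read().decode("utf-8"))
```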

5.4.15. GetCapabilities response extensions¶

The WCS GetCapabilities response contains some rasdaman-specific extensions, as documented below.

The <ows:AdditionalParameters> element of each coverage contains some information which can be useful to clients:

sizeInBytes - an estimated size (in bytes) of the coverage

sizeInBytesWithPyramidLevels - an estimated size (in bytes) of the base coverage plus the sizes of its pyramid coverages; only available if this coverage has a pyramid

axisList - the coverage axis labels in geo CRS order

Example:

5.4.16. DescribeCoverage rasdaman metadata¶

Rasdaman may generate specific metadata in the DescribeCoverage response for a particular coverage; this section documents the structure of this metadata.

If areas of validity were defined during the import of a coverage, then they will be listed under an <axes> element for each affected axis. More details on the metadata structure can be found here.

If global metadata was specified explicitly or harvested automatically from input files during data import (docs), it will be listed in this section, with one element per metadata key/value pair. Each element has a name under the ras: namespace set to the key, and text content set to the value. For example, explicit metadata in the ingredients file specified like this:

"metadata": { "global": { "title": "ERA5-Land monthly averaged data from 1950 to present", "data_type": "Gridded" } }

will appear as follows in the DescribeCoverage:

<ras:covMetadata> <ras:title>ERA5-Land monthly averaged data from 1950 to present</ras:title> <ras:data_type>Gridded</ras:data_type> </ras:covMetadata>
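The mapping is one element per key/value pair, so the DescribeCoverage output can be predicted from the ingredients file. A minimal Python sketch of that mapping; it assumes the keys are valid XML element names, and it does not reproduce rasdaman's exact whitespace or namespace declaration. The function name is hypothetical.

```python
from xml.sax.saxutils import escape


def cov_metadata_xml(global_metadata: dict) -> str:
    """Render global metadata as <ras:covMetadata>, one child element per key."""
    children = "".join(
        f"<ras:{key}>{escape(str(value))}</ras:{key}>"  # key -> element name, value -> text
        for key, value in global_metadata.items()
    )
    return f"<ras:covMetadata>{children}</ras:covMetadata>"
```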

If a color palette table was specified explicitly or harvested automatically from input files during data import (docs), it will be listed under a <ras:colorPaletteTable> element. An example of a color palette table and metadata (see previous point) collected from the input file:

Listed with gdalinfo, the file has the following metadata:

If band and axis metadata has been specified explicitly or harvested automatically from input files during data import (docs), they will be listed under <ras:bands> and <ras:axes> elements respectively. An axis A will be a separate element <ras:A> containing the axis metadata elements; the same holds for the coverage bands. For example, the import ingredients configuration listed in the example here will result in the following DescribeCoverage structure:

5.4.17. GetCoverage request¶

5.4.17.1. Interpolation¶

There are two supported formats for the interpolation parameter in WCS GetCoverage requests:

Full URI, e.g. http://www.opengis.net/def/interpolation/OGC/1.0/bilinear

Shorthand format, e.g. bilinear
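Both forms identify the same method. A client that wants to normalize its input before building a request could expand the shorthand form as sketched below; the helper name is hypothetical, and it does not check whether the method is one rasdaman supports.

```python
OGC_INTERPOLATION_PREFIX = "http://www.opengis.net/def/interpolation/OGC/1.0/"


def interpolation_uri(value: str) -> str:
    """Expand a shorthand interpolation name to the full OGC URI; URIs pass through."""
    if value.startswith(("http://", "https://")):
        return value
    return OGC_INTERPOLATION_PREFIX + value
```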

5.4.18. CRS notation¶

When a CRS is used in a WCS / WCPS request for subsetting or for projecting to an output CRS, the following notations are supported:

Full CRS URL, e.g. http://localhost:8080/rasdaman/def/crs/EPSG/0/4326 (standardized format)

Shorthand CRS with authority, version and code, e.g. EPSG/0/4326

Shorthand CRS with authority and code, e.g. EPSG:4326
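The three notations differ only in how much of the URL is spelled out. A Python sketch that expands the shorthand forms to the full URL; the resolver base is the local deployment assumed throughout this guide, the function name is hypothetical, and filling in version 0 for the authority:code form is an assumption that matches EPSG but not every authority (OGC codes, for example, use other versions).

```python
import re

DEF_CRS_BASE = "http://localhost:8080/rasdaman/def/crs"  # assumed resolver


def crs_url(crs: str, base: str = DEF_CRS_BASE) -> str:
    """Expand EPSG:4326 or EPSG/0/4326 to a full CRS URL; full URLs pass through."""
    if crs.startswith(("http://", "https://")):
        return crs
    m = re.fullmatch(r"([A-Za-z]+)/([^/]+)/(\w+)", crs)
    if m:
        authority, version, code = m.groups()
    else:
        m = re.fullmatch(r"([A-Za-z]+):(\w+)", crs)
        if not m:
            raise ValueError(f"unrecognized CRS notation: {crs}")
        authority, code = m.groups()
        version = "0"  # assumption: correct for EPSG, not for every authority
    return f"{base}/{authority}/{version}/{code}"
```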

5.5. OGC Web Coverage Processing Service (WCPS)¶

The OGC Web Coverage Processing Service (WCPS) standard defines a protocol-independent language for the extraction, processing, analysis, and fusion of multi-dimensional gridded coverages, often called datacubes.

5.5.1. General¶

WCPS requests can be submitted both in abstract syntax:

for c in (test_mean_summer_airtemp)
return
  encode(c[Lat(-40:-35), Long(120:121)],
    "netcdf",
    "{ \"nodata\": [0], \"metadata\": { \"new_metadata\": \"This is a new added metadata\" }, \"formatParameters\": {\"INTERLEAVE\": \"BAND\"} }"
  )

and query embedded in XML wrapper elements:

<?xml version="1.0" encoding="UTF-8" ?>
<ProcessCoveragesRequest xmlns="http://www.opengis.net/wcps/1.0" service="WCPS" version="1.0.0">
  <query>
    <abstractSyntax>
      for c in (test_mean_summer_airtemp)
      return
        count(c[Lat(-43.525:-40), Long(125:130)] > 15) +
        count(c[Lat(-43.525:-20), Long(125:150)] &lt; 25)
    </abstractSyntax>
  </query>
</ProcessCoveragesRequest>

For example, using the WCS GET/KVP protocol binding, a WCPS request can be sent through the following ProcessCoverages request:

http://localhost:8080/rasdaman/ows?service=WCS&version=2.0.1&request=ProcessCoverages&query=<wcps-query>
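Because WCPS queries contain spaces, quotes, brackets, and `$`, the query value has to be percent-encoded when it is sent via GET/KVP. A Python sketch using only the standard library; the helper name is hypothetical and the endpoint is the local deployment assumed throughout this guide.

```python
from urllib.parse import urlencode


def process_coverages_url(query: str, endpoint: str = "http://localhost:8080/rasdaman/ows") -> str:
    """Build a WCS GET/KVP ProcessCoverages request carrying a WCPS query."""
    params = {
        "service": "WCS",
        "version": "2.0.1",
        "request": "ProcessCoverages",
        "query": query,  # percent-encoded by urlencode
    }
    return endpoint + "?" + urlencode(params)
```

For long queries, sending the same parameters in a POST body avoids URL length limits.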

The following subsections list enhancements rasdaman offers over the OGC WCPS standard. A brief introduction to the WCPS language is given in the WCPS cheatsheet; further educational material is available on EarthServer.

5.5.2. Polygon/Raster Clipping¶

The non-standard clip() function enables clipping in WCPS. The signature is as follows:

clip( coverageExpression, wkt [, subsettingCrs ] )

where

coverageExpression is an expression of result type coverage, e.g. dem + 10;

wkt is a valid WKT (Well-Known Text) expression, e.g. POLYGON((...)), LineString(...);

subsettingCrs is an optional CRS URL in which the wkt coordinates are expressed, e.g. "http://localhost:8080/rasdaman/def/crs/EPSG/0/4326".

5.5.2.1. Clipping Examples¶

Polygon clipping with coordinates in EPSG:4326 on a coverage with native CRS EPSG:3857:

Linestring clipping on a 3D coverage with axes X, Y, datetime.

Linestring clipping on a 2D coverage with axes X, Y. In this case, with the WITH COORDINATES extra parameter, the geo coordinates of the values on the linestring will be included in the result as well. The first two bands of the result hold the coordinates (in geo CRS order), and the remaining bands the original cell values. Example output for the above query: "-28.975 119.975 90","-28.975 120.475 84","-28.475 120.975 80", ...

Multipolygon clipping on a 2D coverage.

Curtain clipping by a Linestring on a 3D coverage

Curtain clipping by a Polygon on a 3D coverage

Corridor clipping by a Linestring on a 3D coverage

Corridor clipping by a Polygon on a 3D coverage (geo CRS: EPSG:4326) with input geo coordinates in EPSG:3857.
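Clients consuming the WITH COORDINATES output need to split each entry into coordinates and cell values. A Python sketch, assuming the comma-separated, double-quoted layout of the example output above with whitespace between the numbers; the function name is hypothetical.

```python
import re


def parse_linestring_output(text: str) -> list[tuple[float, float, list[float]]]:
    """Split each quoted 'c1 c2 v1 [v2 ...]' entry into coordinates and band values."""
    points = []
    for entry in re.findall(r'"([^"]*)"', text):
        numbers = [float(tok) for tok in entry.split()]
        # first two numbers: coordinates in geo CRS axis order; the rest: band values
        points.append((numbers[0], numbers[1], numbers[2:]))
    return points
```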

5.5.3. Auto-ratio for spatial scaling¶

The scale() function allows specifying the target extent of only one of the spatial horizontal axes, instead of requiring both. In such a case, the extent of the unspecified axis will be determined automatically while preserving the original ratio between the two spatial axes.
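The arithmetic behind the automatic extent is a proportion: if the source grid has W cells along Long and H along Lat, and the target for Long is w cells, then Lat receives about w · H / W cells. A Python sketch of that proportion; rounding to the nearest integer is an assumption, since this guide does not state rasdaman's exact rounding rule, and the function name is hypothetical.

```python
def auto_ratio_extent(src_specified: int, src_other: int, target_specified: int) -> int:
    """Cell count for the unspecified axis that preserves the source aspect ratio."""
    if src_specified <= 0:
        raise ValueError("source extent must be positive")
    return round(target_specified * src_other / src_specified)  # rounding is assumed
```

For a 3600 x 1800 grid scaled to 360 cells along Long, this gives 180 cells along Lat.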

For example, in the request below the extent of Lat will be automatically set to a value that preserves the ratio in the output result:

5.5.4. Non-scaled axes are optional¶

The scale() function will implicitly add the full domains of unspecified non-spatial axes of a given coverage, with the effect that they will not be scaled in the result. This deviates from the OGC WCPS standard, which requires all axes to be specified with target domains, even if the resolution of an axis should not be changed in the result.

In the example query below, a 3D coverage is scaled only spatially because only the spatial axes E and N are specified in the target scale intervals, while the non-spatial ansi axis is omitted.

5.5.5. Extensions on domain functions¶

The domain interval can be extracted from domain() and imageCrsDomain(). Both the interval, i.e. [lowerBound:upperBound], and the lower as well as the upper bound can be retrieved for each axis.

Syntax:

operator(.lo|.hi)?

with .lo or .hi returning the lower bound or upper bound of this interval.

Further, the third argument of the domain() operator, the CRS URL, is optional. If not specified, domain() will use the CRS of the selected axis (i.e. the second argument) instead.

For example, the coverage AvgLandTemp has 3 dimensions with a grid bounding box of (0:184, 0:1799, 0:3599) and a geo bounding box of ("2000-02-01:2015-06-01", -90:90, -180:180). The table below lists various expressions and their results:

| Expression | Result |
|---|---|

5.5.6. LET clause¶

An optional LET clause allows binding alias variables to valid WCPS query sub-expressions; subsequently the alias variables can be used in the return clause instead of repeating the aliased sub-expressions.

The syntax in context of a full query is as follows:

FOR-CLAUSE

LET $variable := assignment [ , $variable := assignment ]

...

[ WHERE-CLAUSE ]

RETURN-CLAUSE

where

assignment ::= coverageExpression | [ dimensionalIntervalList ]

An example with the first case:

for $c in (test_mr)

let $a := $c[i(0:50), j(0:40)],

$b := avg($c) * 2

return

encode( scale( $c, { imageCrsDomain( $c ) } ) + $b, "image/png" )

The second case allows conveniently specifying domains which can then be readily used in subset expressions, e.g.:

for $c in (test_mr)
let $dom := [i(20), j(40)]
return
  encode( $c[ $dom ] + 10, "application/json" )

5.5.7. min and max functions¶

Given two coverage expressions A and B (resulting in compatible coverages, i.e. same domains and types), min(A, B) and max(A, B) calculate a result coverage with the minimum / maximum for each pair of corresponding cell values of A and B.

For multiband coverages, bands in the operands must be pairwise compatible; comparison is done in lexicographic order with the first band being most significant and the last being least significant.

The result coverage has the same domain and type as the input operands.
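The lexicographic rule can be modelled by treating each multiband cell as a tuple ordered from the first to the last band, which Python already compares lexicographically. The sketch uses flat lists of cells for brevity and illustrates the comparison rule only, not how rasdaman evaluates it; the function name is hypothetical.

```python
def cellwise(op, a, b):
    """Apply min or max to corresponding cells of two equally sized operands."""
    if len(a) != len(b):
        raise ValueError("operands must have the same domain")
    # Tuples (one entry per band) compare lexicographically: the first band
    # decides, and later bands only break ties.
    return [op(x, y) for x, y in zip(a, b)]


A = [(1, 9), (5, 0), (3, 3)]
B = [(2, 0), (5, 1), (3, 3)]
print(cellwise(max, A, B))  # [(2, 0), (5, 1), (3, 3)]
```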

5.5.8. Positional parameters¶

Positional parameters allow referencing binary or string values in a WCPS query, which are specified in a POST request in addition to the WCPS query. Each positional parameter must be a positive integer prefixed by a $, e.g. $1, $2, etc.

The endpoint for sending a WCPS query by POST with extra values is:

/rasdaman/ows?SERVICE=WCS&VERSION=2.0.1&REQUEST=ProcessCoverages

with the mandatory parameter query and optional positional parameters 1, 2, etc. The value of a positional parameter can be either binary file data or a string value.
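Before sending such a request, a client can check that every positional parameter referenced in the query is actually supplied. A hypothetical Python helper for that check; it assumes the parameters are keyed by their number without the $ prefix (as in the form fields 1, 2, ... of the request), and it relies on coverage aliases such as $c never being pure digits.

```python
import re

# $1, $2, ... (positive integers); coverage aliases such as $c do not match
POSITIONAL = re.compile(r"\$([1-9][0-9]*)")


def missing_positional_params(query: str, params: dict) -> list[str]:
    """Numbers of positional parameters referenced in the query but not supplied."""
    referenced = sorted({m.group(1) for m in POSITIONAL.finditer(query)}, key=int)
    return [n for n in referenced if n not in params]
```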

5.5.8.1. Example¶

One can use the curl tool to send a WCPS request with positional parameters from the command line; it will read the contents of specified files automatically if they are prefixed with a @.

For example, to combine an existing coverage $c with two temporary coverages $d and $e provided by positional parameters $1 and $2 into a result encoded in png format (specified by positional parameter $3):

curl -s "http://localhost:8080/rasdaman/ows?SERVICE=WCS&VERSION=2.0.1&REQUEST=ProcessCoverages" \
  --form-string 'query=for $c in (existing_coverage), $d in (decode($1)), $e in (decode($2))
return encode(($c + $d + $e)[Lat(0:90), Long(-180:180)], "$3")' \
  -F "1=@/home/rasdaman/file1.tiff" \
  -F "2=@/home/rasdaman/file2.tiff" \
  -F "3=png" > test.png

Note

It is also possible to submit positional parameters prefixed with the $ character when it is escaped as \$ in the bash command. For example:

curl ... \
  -F "\$1=@/home/rasdaman/inputFile1.tiff" \
  -F "\$2=25.5"

5.5.9. Decode Operator in WCPS¶

The non-standard decode() operator allows combining existing coverages with temporary coverages created in memory from input files attached in the request body via POST.

Only 2D geo-referenced files readable by GDAL are supported. One way to check if a file $f is readable by GDAL is with gdalinfo $f. netCDF/GRIB files are not supported.

5.5.9.1. Syntax¶

The syntax is

decode(${positional_parameter})

where ${positional_parameter} refers to files in the POST request. See the previous section for more details on positional parameters.

5.5.9.2. Example¶

5.5.10. Case Distinction¶

Conditional evaluation based on the cell values of a coverage is possible with the switch expression. Although the syntax is a little different, the semantics is very much compatible with the rasql case statement, so it is recommended to additionally have a look at its corresponding documentation.

5.5.10.1. Syntax¶

SWITCH

CASE condExp RETURN resultExp

[ CASE condExp RETURN resultExp ]*

DEFAULT RETURN resultExpDefault

where condExp and resultExp are either scalar-valued or coverage-valued expressions.

5.5.10.2. Constraints¶

All condExp must return either boolean values or boolean coverages

All resultExp must return either scalar values or coverages

The domain of all condition expressions must be the same

The domain of all result expressions must be the same (that means same extent, resolution/direct positions, CRS)

5.5.10.3. Evaluation Rules¶

If the result expressions return scalar values, the returned scalar value on a branch is used in places where the condition expression on that branch evaluates to True. If the result expressions return coverages, the values of the returned coverage on a branch are copied into the result coverage in all places where the condition coverage on that branch contains pixels with value True.

The conditions of the statement are evaluated in a manner similar to the IF-THEN-ELSE statement in programming languages such as Java or C++. This implies that the conditions must be specified in order of generality, starting with the least general and ending with the default result, which is the most general one. A less general condition specified after a more general condition will be ignored, as any cell meeting the less general condition will already have met the more general one.

Furthermore, the following hold:

domainSet(result) = domainSet(condExp1)

metadata(result) = metadata(condExp1)

rangeType(result) = rangeType(resultExp1). In case resultExp1 is a scalar, the result range type is the range type describing the coverage containing the single pixel resultExp1.

5.5.10.4. Examples¶

switch

case $c < 10 return {red: 0; green: 0; blue: 255}

case $c < 20 return {red: 0; green: 255; blue: 0}

case $c < 30 return {red: 255; green: 0; blue: 0}

default return {red: 0; green: 0; blue: 0}

The above example assigns blue to all pixels in the $c coverage having a value less than 10, green to the ones having values at least equal to 10, but less than 20, red to the ones having values at least equal to 20 but less than 30 and black to all other pixels.
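The first-match rule of the evaluation can be reproduced in a few lines of Python over a flat list of cell values. This is a sketch of the semantics only (rasdaman evaluates whole coverages, not Python lists); it replays the first example above with RGB tuples standing in for the struct literals, and the function name is hypothetical.

```python
def switch(cases, default, cells):
    """Evaluate SWITCH per cell: the first CASE whose condition holds wins."""
    result = []
    for v in cells:
        for cond, value in cases:
            if cond(v):
                result.append(value(v) if callable(value) else value)
                break
        else:  # no CASE matched: DEFAULT applies
            result.append(default(v) if callable(default) else default)
    return result


BLUE, GREEN, RED, BLACK = (0, 0, 255), (0, 255, 0), (255, 0, 0), (0, 0, 0)
cases = [
    (lambda v: v < 10, BLUE),
    (lambda v: v < 20, GREEN),  # reached only when v >= 10
    (lambda v: v < 30, RED),    # reached only when v >= 20
]
print(switch(cases, BLACK, [5, 15, 25, 35]))
# [(0, 0, 255), (0, 255, 0), (255, 0, 0), (0, 0, 0)]
```

Swapping the first two cases would turn every value below 20 green, which is the ordering pitfall described under the evaluation rules.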

switch

case $c > 0 return log($c)

default return 0

The above example computes log of all positive values in $c, and assigns 0 to the remaining ones.

switch

case $c < 10 return $c * {red: 0; green: 0; blue: 255}

case $c < 20 return $c * {red: 0; green: 255; blue: 0}

case $c < 30 return $c * {red: 255; green: 0; blue: 0}

default return {red: 0; green: 0; blue: 0}

The above example assigns blue: 255 multiplied by the original pixel value to all pixels in the $c coverage having a value less than 10, green: 255 multiplied by the original pixel value to the ones having values at least equal to 10, but less than 20, red: 255 multiplied by the original pixel value to the ones having values at least equal to 20 but less than 30 and black to all other pixels.

5.5.11. CIS 1.0 to CIS 1.1 encoding¶

For output format application/gml+xml, WCPS supports delivery as a CIS 1.1 GeneralGridCoverage by specifying an additional proprietary parameter outputType in the encode() function, e.g.:

for c in (test_irr_cube_2)

return encode( c, "application/gml+xml",

"{\"outputType\":\"GeneralGridCoverage\"}" )

5.5.12. Query Parameter¶

For specifying the WCPS query in a request, the non-standard q parameter is supported in addition to the query parameter. A request must contain only one q or query parameter.

http://localhost:8080/rasdaman/ows?service=WCS&version=2.0.1&REQUEST=ProcessCoverages&q=<wcps-query>

5.5.13. Describe Operator in WCPS¶

The non-standard describe() function delivers a “coverage description” of a given coverage without the range set, in either GML or JSON.

5.5.13.1. Syntax¶

describe( coverageExpression, outputFormat [ , extraParameters ] )

where

outputFormat is a string specifying the format encoding in which the result will be formatted. Formats are indicated through their MIME type identifier, just as in encode(). Supported formats:

application/gml+xml or gml for GML

application/json or json for JSON

extraParameters is an optional string containing parameters for fine-tuning the output, just as in encode(). Supported options:

"outputType=GeneralGridCoverage" to return a CIS 1.1 General Grid Coverage structure

5.5.13.2. Semantics¶

A describe() operation returns a description of the coverage resulting from the coverage expression passed, consisting of domain set, range type, and metadata, but not the range set. As such, this operator is the WCPS equivalent of a WCS DescribeCoverage request, and the output adheres to the same WCS schema.

The coverage description generated will follow the coverage’s type, so one of Rectified Grid Coverage (CIS 1.0), Referenceable Grid Coverage (CIS 1.0), or General Grid Coverage (CIS 1.1).

By default, the coverage will be provided as a Rectified or Referenceable Grid Coverage (in accordance with its type); optionally, a General Grid Coverage can be generated instead through "outputType=GeneralGridCoverage". As JSON is supported only from OGC CIS 1.1 onwards, this format is only available (i) if the coverage is stored as a CIS 1.1 General Grid Coverage (currently not supported) or (ii) if this output type is selected explicitly through an extraParameter.

Efficiency: The describe() operator normally does not materialize the complete coverage, but determines only the coverage description, making this function very efficient. A full evaluation is only required if coverageExpression contains a clip() performing a curtain, corridor, or linestring operation.

5.5.13.3. Examples¶

Determine coverage description as a CIS 1.0 Rectified Grid Coverage in GML, without evaluating the range set:

for $c in (Cov) return describe( $c.red[Lat(10:20), Long(30:40)], "application/gml+xml" )

Deliver coverage description as a CIS 1.1 General Grid Coverage in GML, where range type changes in the query:

for $c in (Cov) return describe( { $c.red; $c.green; $c.blue }, "application/gml+xml", "outputType=GeneralGridCoverage" )

Deliver coverage description as a CIS 1.1 General Grid Coverage, in JSON:

for $c in (Cov) return describe( $c, "application/json", "outputType=GeneralGridCoverage" )

5.5.13.4. Specific Exceptions¶

Unsupported output format

This format is only supported for General Grid Coverage

Illegal extra parameter

5.5.14. Flip Operator in WCPS¶

The non-standard FLIP function enables reversing the values along an axis of a coverage expression. The output coverage expression has no changes in the grid domains, base type, and dimensionality.

If encoded in GML format, then in the gml:sequenceRule element the flipped axis is shown with a minus sign (e.g. -1 instead of +1).

See more details in rasql.

Syntax

flipExp: FLIP coverageExpression ALONG axisLabel

axisLabel: identifier

A FLIP expression consists of coverageExpression, which denotes the input coverage, and one axisLabel of the coverage along which the values are flipped.

Examples

The following examples illustrate the syntax of the FLIP operator.

Flipping the 2D coverage expression on its Long axis:

for $c in (test_mean_summer_airtemp) return encode( FLIP $c[Lat(-30:-15), Long(125:145)] ALONG Long , "image/png")

Flipping the 3D coverage expression on its unix time axis:

for $c in (test_wms_3d_time_series_irregular) return encode( FLIP $c[Lat(40:90), Long(80:140)] + 20 ALONG unix , "json")
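On plain nested lists, FLIP amounts to reversing the index order along one axis while keeping the shape. A Python sketch for a 2D grid indexed [row][column]; the numeric axis argument is an illustrative stand-in for an axis label such as Long, and the function name is hypothetical.

```python
def flip(grid, axis):
    """Reverse a 2D list-of-rows grid along axis 0 (rows) or axis 1 (columns)."""
    if axis == 0:
        return grid[::-1]
    if axis == 1:
        return [row[::-1] for row in grid]
    raise ValueError("axis must be 0 or 1")
```

Flipping twice along the same axis restores the original grid, and the grid dimensions never change, matching the statement that domains, base type, and dimensionality are unaffected.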

5.5.15. Sort Operator in WCPS¶

The SORT operator enables the user to sort a coverage expression along an axis. The sorting is done by slicing the array of the coverage along that axis, calculating a slice rank for each of the slices, and then rearranging the slices according to their ranks, in ascending or descending order.

The sorting causes no change in the spatial domain, base type, or dimensionality. This means that the resulting array is the original array with its slices reordered along the sorting axis. See more details in rasql.

Note

After sorting, the geo domains (and coefficients for irregular axis) of the sorted axis are not changed, even though the grid values associated with geo coordinates are changed.

Syntax

sortExp: SORT coverageExp ALONG sortAxis [listingOrder] BY cellExp

coverageExp: a general coverage expression

sortAxis: identifier.

listingOrder: ASC (default if omitted) | DESC

cellExp: an expression that produces scalar ranks for each slice along the sortAxis.

Note

One should not subset (slice/trim) the sortAxis in the cellExp.

Examples

The following examples illustrate the syntax of the SORT operator.

Sort the 2D coverage expression on its Long axis according to the coverage values at each longitude index and -40 latitude, in ascending order:

for $c in (test_mean_summer_airtemp) return encode( SORT $c ALONG Long BY $c[Lat(-40)] , "image/png")

Sort the 3D coverage expression on its unix time axis in descending order by the sum of each time slice along it:

for $c in (test_wms_3d_time_series_irregular) return encode( SORT $c.Red + 30 ALONG unix DESC BY add($c) , "json")
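The slice, rank, and rearrange procedure can be sketched in Python for a 2D grid whose rows are the slices along the sort axis; `rank` plays the role of cellExp. The function name is hypothetical, the geo-domain behaviour from the note above is not modelled, and whether rasdaman keeps equally ranked slices in their original order is an assumption (Python's sort is stable, even with `reverse=True`).

```python
def sort_along_rows(grid, rank, descending=False):
    """Reorder the rows (slices along axis 0) of a 2D grid by rank(slice).

    Shape and cell values are unchanged; only the order of the slices differs.
    """
    return sorted(grid, key=rank, reverse=descending)


g = [[3, 3], [1, 1], [2, 5]]
print(sort_along_rows(g, sum, descending=True))  # [[2, 5], [3, 3], [1, 1]]
```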

5.5.16. Calendar capabilities¶

Since v10.3, rasdaman supports quite flexible and powerful methods for addressing temporal coordinates in WCS / WCPS subsetting and other operations. A common use case is aggregating data over a time series per temporal unit, e.g. per day, month, year, etc.

5.5.16.1. Temporal coordinates¶

Temporal coordinates must be specified in ISO datetime format; the full format including all components is YYYY-MM-DDTHH:MM:SS.SSSZ, explained as follows:

YYYY: year

MM: month

DD: day

T: separator between date and time components

HH: hour

MM: minute

SS: second

SSS: millisecond

Z: UTC timezone (GMT +0); imported coverages currently have a fixed UTC timezone, and there is no support for changing to a different timezone when importing data

Not all components must be specified: at minimum YYYY is required.

The last component in the datetime value determines its granularity; for example, the granularity of "2015-01-02" is day, while "2015-02" has granularity month. The granularity modifies the range of a datetime string in a subset. For example, the datetime value "2015-01" with granularity month has a time range from lower bound "2015-01-01T00:00:00.000Z" (first moment of January 2015) to upper bound "2015-01-31T23:59:59.999Z" (last moment of January 2015).

5.5.16.2. Shifting temporal coordinates¶

It is possible to add or subtract a time period from a datetime value, thereby shifting the granularity as well. The shift period is specified separated by a whitespace after the datetime string. It is composed of several parts in sequence:

Initial designator P (for Period): required

Number of years followed by Y

Number of months followed by M

Number of days followed by D

Time designator (separator) T: required only if any time components are specified

Number of hours followed by H

Number of minutes followed by M

Number of seconds followed by S

If any number is negative then the preceding datetime is shifted backward instead of forward as usual; non-required parts can be omitted.

For example, time("2015-01-01 P2Y") shifts the input datetime forward by 2 years to time("2017-01-01").
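A Python sketch of the shift, assuming the period grammar listed above (P, optional Y/M/D parts, then T and optional H/M/S parts, each of which may be negative). Years and months are applied on the calendar and the remaining parts as a fixed duration. Clamping the day to the month length (e.g. for 2015-01-31 P1M) is an assumption, as this guide does not state how rasdaman treats such cases; the function name is hypothetical and fractional seconds are not handled.

```python
import calendar
import re
from datetime import datetime, timedelta

PERIOD = re.compile(
    r"P(?:(-?\d+)Y)?(?:(-?\d+)M)?(?:(-?\d+)D)?"
    r"(?:T(?:(-?\d+)H)?(?:(-?\d+)M)?(?:(-?\d+)S)?)?"
)


def shift(moment: datetime, period: str) -> datetime:
    """Shift a datetime by a period such as P2Y, P-1M or P1DT6H."""
    m = PERIOD.fullmatch(period)
    if not m:
        raise ValueError(f"invalid period: {period}")
    y, mo, d, h, mi, s = (int(g) if g else 0 for g in m.groups())
    # years and months move along the calendar; the day is clamped (assumption)
    total_months = moment.year * 12 + (moment.month - 1) + y * 12 + mo
    year, month0 = divmod(total_months, 12)
    month = month0 + 1
    day = min(moment.day, calendar.monthrange(year, month)[1])
    moved = moment.replace(year=year, month=month, day=day)
    # days and time parts are a fixed duration
    return moved + timedelta(days=d, hours=h, minutes=mi, seconds=s)
```

With this sketch, `shift(datetime(2015, 1, 1), "P2Y")` reproduces the example above, giving 2017-01-01.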

5.5.16.3. Concatenating time components¶

Individual time components can be concatenated into a full datetime string with the . operator. Each component is either a string or a temporal function which returns a string. For example, time("2015" . "01" . "01") is a slice which will be resolved as time("2015-01-01").

5.5.16.4. Temporal subsets¶

The semantics of slices and trims in temporal subsets is clarified subsequently.

Slicing generally selects a single index on a coverage axis. In temporal slices, however, we have to keep in mind the granularity of the datetime value.

If the time range defined by the granularity of the slice coordinate encompasses exactly one grid index, then this index is returned.

Otherwise, i.e. if it contains no grid index or more than one, an error is returned. In this case it may be necessary to adjust the slice to one with larger or smaller granularity, e.g. from "2015-01" with month granularity to "2015-01-01" with day granularity.

Trimming corresponds to selecting all the indices between a lower and an upper bound. On a temporal axis, the lower bound is converted to the full ISO datetime format as before, while the upper bound is converted up to the last moment of the granularity of the datetime value. For example, a trim time("2015-01-01":"2015-01-03") is first expanded internally to time("2015-01-01T00:00:00.000Z":"2015-01-03T23:59:59.999Z") before it is used to subset the time axis.

For example, selecting only data in January 2023 could be done with time("2023-01":"2023-01"); note that it is not necessary to specify any further time components, e.g. day.
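Taken together, the granularity rule, the slicing rule, and the trim expansion described in this and the previous subsections can be sketched in Python. The sketch assumes UTC timestamps, millisecond precision, and axis coefficients given as a list of datetime objects; the function names are hypothetical, and rasdaman's own datetime parser may accept inputs that this one rejects.

```python
import calendar
from datetime import datetime, timedelta

# Partial ISO formats, least to most specific, with the unit each denotes
FORMATS = [
    ("%Y", "year"), ("%Y-%m", "month"), ("%Y-%m-%d", "day"),
    ("%Y-%m-%dT%H", "hour"), ("%Y-%m-%dT%H:%M", "minute"),
    ("%Y-%m-%dT%H:%M:%S", "second"), ("%Y-%m-%dT%H:%M:%S.%f", "millisecond"),
]
FIXED_STEPS = {
    "day": timedelta(days=1), "hour": timedelta(hours=1),
    "minute": timedelta(minutes=1), "second": timedelta(seconds=1),
    "millisecond": timedelta(milliseconds=1),
}


def granularity_bounds(value: str) -> tuple[datetime, datetime]:
    """First and last moment (millisecond precision) covered by a partial ISO datetime."""
    text = value.rstrip("Z")
    for fmt, unit in FORMATS:
        try:
            lo = datetime.strptime(text, fmt)
        except ValueError:
            continue
        break
    else:
        raise ValueError(f"not an ISO datetime: {value}")
    if unit == "year":
        nxt = lo.replace(year=lo.year + 1)
    elif unit == "month":
        nxt = lo + timedelta(days=calendar.monthrange(lo.year, lo.month)[1])
    else:
        nxt = lo + FIXED_STEPS[unit]
    return lo, nxt - timedelta(milliseconds=1)


def trim_bounds(lower: str, upper: str) -> tuple[datetime, datetime]:
    """Expand a temporal trim: lower bound as-is, upper bound to its last moment."""
    return granularity_bounds(lower)[0], granularity_bounds(upper)[1]


def resolve_slice(coefficients: list[datetime], value: str) -> int:
    """Grid index of the only coefficient inside the slice's range; error otherwise."""
    lo, hi = granularity_bounds(value)
    hits = [i for i, t in enumerate(coefficients) if lo <= t <= hi]
    if len(hits) != 1:
        raise ValueError(f"{value!r} matches {len(hits)} grid indices, expected 1")
    return hits[0]
```

For example, `trim_bounds("2015-01-01", "2015-01-03")` yields the same bounds as the internal expansion shown above, and `resolve_slice` rejects "2015-01" on a daily axis because that month contains 31 grid indices.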

5.5.16.5. Time axis iterator¶

Coverage constructors and condensers have an OVER clause where iterator variables over the coordinates of a coverage axis (potentially a subset) can be specified. In case of a temporal axis, lists of temporal coordinates are built from coverage domain information or time string literals. Afterwards, when the constructor or condenser is evaluated, the iterator variable goes over the list in sequence.

There are three ways to specify the temporal coordinates for iteration:

iterVar axis( "lowerBound" : "upperBound" [ : "step" ] )

Here lowerBound and upperBound are datetime values. The step is an optional parameter with the same format as specified earlier in Shifting temporal coordinates, which indicates that iterVar steps from the lower to the upper bound in step increments. If step is omitted, then it is derived from the granularity of the lowerBound. For example, over $pt date("2014" : "2023" : "P1Y") is identical to over $pt date("2014" : "2023"), as the granularity of the lower bound is a year (P1Y); the iterated time coordinates will be "2014", "2015", …, "2023".

iterVar axis( "dateTime1", "dateTime2", ... )

Here iterVar goes through a list of explicitly specified datetime values. For example, this query will build a coverage of maximum values of the data slices at the days explicitly listed in the over clause:

for c in (testCov) return encode( coverage result over $pt t("2023-01-01", "2023-01-02", "2023-01-03") values max ( c[t($pt : $pt)] ) , "csv")

iterVar axis( timeTruncator(...) )

The set of coordinates to iterate through is in this case generated by a time truncator function.
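The first form behaves like a range with a calendar step. A Python sketch restricted to month granularity (step P1M by default, or PnM as `step_months=n`); the function name is hypothetical, and it only shows the order in which iterVar visits coordinates, not how rasdaman evaluates the constructor.

```python
def month_steps(lower: str, upper: str, step_months: int = 1) -> list[str]:
    """Iterate "YYYY-MM" coordinates from lower to upper in step_months increments."""
    if step_months <= 0:
        raise ValueError("step must be positive")
    y, m = map(int, lower.split("-"))
    end = tuple(map(int, upper.split("-")))
    out = []
    while (y, m) <= end:
        out.append(f"{y:04d}-{m:02d}")
        y, m0 = divmod(y * 12 + (m - 1) + step_months, 12)
        m = m0 + 1
    return out
```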

5.5.16.6. Time truncator functions¶

Time truncators allow to extract the actually present time coordinates, at a particular granularity, from a particular coverage under inspection. They are used typically in axis iterators of coverage constructor / general condenser.

They are a family of functions tr: list<datetime> -> list<datetime> which reduce the accuracy of all time stamps passed beyond the chosen granularity and return a set of the matched datetimes without duplicates; tr is one of allyears, allmonths, alldays, allhours, allminutes, allseconds.

If $c is a coverage alias in a for clause, and its axis time extends from 2022-11-01 to 2023-03-31, then:

allyears($c.domain.date) = "2022", "2023"

allmonths($c.domain.date) = "2022-11", …, "2023-03"

alldays($c.domain.date) = "2022-11-01", …, "2023-03-31"

allhours($c.domain.date) = "2022-11-01T00", …, "2023-03-30T23", "2023-03-31T00"

allminutes($c.domain.date) = "2022-11-01T00:00", …, "2023-03-30T23:59", "2023-03-31T00:00"

allseconds($c.domain.date) = "2022-11-01T00:00:00.000", .., "2023-03-30T23:59.999", "2023-03-31T00:00.000"

To iterate through the Januaries of all years on the time axis of a coverage, we can write a query as follows:

for $c in (test_365_days_irregular)
return encode(
  coverage result
  over $pt date( allyears( domain($c, time) ) )
  values $c[date($pt . "-01" : $pt . "-01")],
  "json")

Here, allyears( domain($c, time) ) may return a list of "2022" and "2023"; then for each $pt, date($pt . "-01" : $pt . "-01") will be resolved as:

First iteration: date("2022-01" : "2022-01")

Second iteration: date("2023-01" : "2023-01")
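On ISO 8601 strings, truncation at a given granularity is a prefix cut followed by de-duplication. The Python sketch below models allyears through allminutes that way; it assumes the domain coordinates are available as sorted ISO strings, and the GRANULARITY table and function name are illustrative, not rasdaman code.

```python
# Character length of each ISO 8601 prefix (illustrative mapping)
GRANULARITY = {"allyears": 4, "allmonths": 7, "alldays": 10, "allhours": 13, "allminutes": 16}


def truncate_all(stamps, truncator):
    """Cut each ISO timestamp to the truncator's granularity, dropping duplicates in order."""
    length = GRANULARITY[truncator]
    return list(dict.fromkeys(s[:length] for s in stamps))
```

For a daily axis from 2022-11-01 to 2023-03-31, `truncate_all(stamps, "allmonths")` yields the five months "2022-11" to "2023-03", in temporal order.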

5.5.16.7. Time extractor functions¶

Time extractors allow to extract time components by a specified granularity in the used function name. They are used typically in axis iterators of coverage constructor / general condenser.

They are a family of functions s: list<datetime> -> list<numbers> which return a set of the time components contained in the input list, without duplicates; s is one of years, months, days, hours, minutes, seconds.

If $c is a coverage alias in a for clause, and its axis time extends from 2022-11-01 to 2023-03-31, then:

years(domain($c, time)) = "2022", "2023"

months(domain($c, time)) = "01", "02", "03", "11", "12"

days(domain($c, time)) = "01", …, "31"

hours(domain($c, time)) = "00", …, "23"

minutes(domain($c, time)) = "00", …, "59"

seconds(domain($c, time)) = "00.000", .., "59.999"
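Unlike truncators, extractors keep only one component, so values from different years collapse together and the result comes back in numeric order rather than temporal order, as the months() result in the list above shows. A Python sketch on ISO strings; the COMPONENT slice table and function name are illustrative assumptions.

```python
# Slice positions of each component in an ISO string "YYYY-MM-DDTHH:MM"
COMPONENT = {"years": (0, 4), "months": (5, 7), "days": (8, 10), "hours": (11, 13), "minutes": (14, 16)}


def extract(stamps, component):
    """Sorted distinct values of one datetime component across all stamps."""
    start, end = COMPONENT[component]
    return sorted({s[start:end] for s in stamps})
```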

For example, if the time axis is irregular with two indexes at "2023-01-01" and "2023-08-01", then months( domain($c, time) ) in the query below returns "01" and "08", and the iterated subsets in date("2023-" . $m) will be "2023-01" and "2023-08":

for $c in (test_cov) return encode( coverage temp_cov OVER $m date( months( domain($c, time) ) ) VALUES $c[date("2023-" . $m)], "csv")

Another example: the time axis has daily coefficients over the years 2020, 2021, 2022, and 2023. This query returns all coefficients in February 2020:

for $c in (test_cov) return encode( coverage temp_cov OVER $d date( days( domain($c[time("2020-02":"2020-02")], time) ) ) VALUES $c[date("2020-02-" . $d)], "csv")

Here, days( domain($c[time("2020-02":"2020-02")], time) ) returns the set "01", "02", …, "29", and for each $d in the set, date("2020-02-" . $d) is resolved as:

First iteration: date("2020-02-01")

Second iteration: date("2020-02-02")

…

Last iteration: date("2020-02-29")
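The extractor semantics described in this subsection can be mimicked in a few lines of Python. Each datetime coordinate is mapped to one component, and only the distinct values are kept. This is a sketch, not petascope code. The zero-padded string format follows the example values above, and the "SS.mmm" format for seconds is inferred from "00.000". Sorting the result is an assumption made for readability, since the text only specifies a set.

```python
from datetime import datetime, timedelta

# One formatter per extractor, producing zero-padded strings as in the examples
FORMATTERS = {
    "years": lambda t: f"{t.year:04d}",
    "months": lambda t: f"{t.month:02d}",
    "days": lambda t: f"{t.day:02d}",
    "hours": lambda t: f"{t.hour:02d}",
    "minutes": lambda t: f"{t.minute:02d}",
    "seconds": lambda t: f"{t.second:02d}.{t.microsecond // 1000:03d}",
}


def extract(granularity: str, coords: list[datetime]) -> list[str]:
    """Distinct components of the given granularity, sorted ascending."""
    component = FORMATTERS[granularity]
    return sorted({component(t) for t in coords})


# Daily time axis from 2022-11-01 to 2023-03-31 (151 coefficients)
daily = [datetime(2022, 11, 1) + timedelta(days=i) for i in range(151)]
print(extract("months", daily))  # ['01', '02', '03', '11', '12']

# Irregular axis with two coefficients, iterated as "2023-" . $m
irregular = [datetime(2023, 1, 1), datetime(2023, 8, 1)]
print(["2023-" + m for m in extract("months", irregular)])  # ['2023-01', '2023-08']
```

Note that the extractors lose the year: months() over the daily axis returns "01" once, even though both January 2023 and other years could contribute. This is why the queries above re-attach a fixed year prefix in the subset.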

5.5.16.8. Incompatibilities¶

Prior to this calendar feature, subsets on a temporal axis were handled as follows:

Slice: e.g. time("2015-01-01"). The value is converted to the ISO datetime "2015-01-01T00:00:00.000Z" and the slice is applied on the time axis. If this axis is irregular and has no coefficient at exactly this datetime, petascope throws an exception because the coefficient is not found.

Trim: e.g. time("2015-01":"2015-12"). The subset is converted to the ISO datetime interval "2015-01-01T00:00:00.000Z":"2015-12-01T00:00:00.000Z", and if the time axis is irregular, petascope returns any coefficients between these bounds.
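The sketch below reproduces the old conversion in Python. It shows why a trim like time("2015-01":"2015-12") used to miss most of December: the upper bound was expanded to the first instant of that month. The helper names are hypothetical. Treating both bounds as inclusive is an assumption, since the text only says "between these subsets".

```python
from datetime import datetime


def to_iso_old(value: str) -> str:
    """Expand "YYYY-MM" or "YYYY-MM-DD" to midnight UTC, as the old behaviour did."""
    parts = [int(p) for p in value.split("-")]
    # A missing day (or month) defaults to the first one
    year, month, day = (parts + [1, 1])[:3]
    return datetime(year, month, day).strftime("%Y-%m-%dT%H:%M:%S.000Z")


def old_trim(lo: str, hi: str, coords: list[str]) -> list[str]:
    """Coefficients between the expanded bounds (bounds assumed inclusive)."""
    lo_iso, hi_iso = to_iso_old(lo), to_iso_old(hi)
    # ISO strings in the same format compare in chronological order
    return [c for c in coords if lo_iso <= c <= hi_iso]


# Hypothetical irregular axis with one coefficient in June and one in mid-December
axis = ["2015-06-15T00:00:00.000Z", "2015-12-15T00:00:00.000Z"]
print(old_trim("2015-01", "2015-12", axis))  # ['2015-06-15T00:00:00.000Z']
```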

5.5.17. Polygonize function¶

The polygonize function vectorizes a coverage into polygons. This is useful in a geographic context, for example to layer additional information on existing maps. For more details, see also rasql polygonize.

When the result consists of multiple files, as is the case with ESRI Shapefile, the files are compressed into a single zip archive.

Syntax

polygonize(covExp, targetFormat)

polygonize(covExp, targetFormat, connectedness)

Where

covExp: coverage expression

targetFormat: StringLit

connectedness: integerLit

Examples

The following WCPS query vectorizes a 2D geo-referenced coverage into the ESRI Shapefile format:

for $c in (test_mean_summer_airtemp) return polygonize($c, "ESRI Shapefile")
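As a hedged sketch, the query above can be sent from Python as a key-value WCS ProcessCoverages request. The multi-file Shapefile result then arrives as one zip archive that the standard zipfile module can open. The endpoint http://localhost:8080/rasdaman/ows is an assumption about a local deployment, and the helper names are hypothetical.

```python
import io
import zipfile
from urllib.parse import urlencode

# Assumed address of a local petascope deployment
ENDPOINT = "http://localhost:8080/rasdaman/ows"


def wcps_url(query: str) -> str:
    """KVP WCS ProcessCoverages request that carries a WCPS query."""
    params = {"service": "WCS", "version": "2.0.1",
              "request": "ProcessCoverages", "query": query}
    return f"{ENDPOINT}?{urlencode(params)}"


def shapefile_members(payload: bytes) -> list[str]:
    """Names of the files packed into the zip archive returned by polygonize."""
    with zipfile.ZipFile(io.BytesIO(payload)) as archive:
        return archive.namelist()


url = wcps_url('for $c in (test_mean_summer_airtemp) '
               'return polygonize($c, "ESRI Shapefile")')
```

With network access, urllib.request.urlopen(url).read() would fetch the archive bytes, which can then be passed to shapefile_members.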

5.5.18. Concat operator in WCPS¶

The non-standard concat operator glues coverage expressions together along an axis, producing a coverage expression with a larger grid domain. It is equivalent to concat in rasql, with the same requirements on the grid domains of the contributing coverage expressions.

The result is a grid coverage without any geo-referencing metadata. The concatenation axis in the along clause must exist in all concatenated operand coverages.

Example

The following WCPS query concatenates two 1-D coverages along axis j, creating a larger 1-D coverage:

for $c in (test_mr) return encode( concat $c[i(0)] with $c[i(1)] along j , "json")
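To make the grid-domain arithmetic concrete, here is a Python sketch that computes the result domain of a concatenation. It encodes assumed rasql-style rules: all operands have the same number of axes, extents are equal on every axis other than the concatenation axis, and the result starts at the first operand's lower bound. Treat these rules as assumptions. The rasql concat documentation is authoritative. Domains are modelled as lists of inclusive (lo, hi) pairs, mirroring rasql's [lo:hi] notation.

```python
def concat_domain(domains: list[list[tuple[int, int]]], axis: int) -> list[tuple[int, int]]:
    """Grid domain of operands concatenated along `axis` (0-based index)."""
    first = domains[0]
    for other in domains[1:]:
        if len(other) != len(first):
            raise ValueError("operands must have the same number of axes")
        for k, ((lo1, hi1), (lo2, hi2)) in enumerate(zip(first, other)):
            # Every axis except the concatenation axis must have equal extent
            if k != axis and hi1 - lo1 != hi2 - lo2:
                raise ValueError(f"extent mismatch on axis {k}")
    # Number of grid points along the concatenation axis, summed over operands
    total = sum(d[axis][1] - d[axis][0] + 1 for d in domains)
    result = list(first)
    start = first[axis][0]
    result[axis] = (start, start + total - 1)
    return result


# Two 1-D slices such as $c[i(0)] and $c[i(1)], hypothetically 10 points each
print(concat_domain([[(0, 9)], [(0, 9)]], axis=0))  # [(0, 19)]
```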

5.6. OGC Web Map Service (WMS)¶

The OGC Web Map Service (WMS) standard provides a simple HTTP interface for requesting overlays of geo-registered map images, ready for display.

With petascope, geo data can be served simultaneously via WMS, WMTS, WCS, and WCPS.

This section mainly covers rasdaman extensions of the OGC WMS standard.

5.6.1. GetMap extensions¶

5.6.1.1. Transparency and background color¶

Adding the parameter transparent=true to a WMS GetMap request makes the returned image carry NoData value 0 in its metadata. This indicates to the client that all pixels with value 0 should be considered transparent. It applies to the PNG encoding format.

When transparent=false is set or the parameter is omitted from a WMS GetMap request, the response by default uses white for no-data pixels. To colorize no-data pixels, the GetMap request should specify BGCOLOR=<hexcolor>, where <hexcolor> has the format 0xRRGGBB, e.g. 0x0000FF for blue.

Note

BGCOLOR is valid only with a layer containing 1, 3, or 4 bands.

BGCOLOR does not work together with a range constructor defined in a WMS style via rasql / WCPS fragments.

BGCOLOR is ignored when transparent=true.
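The following Python sketch builds GetMap URLs that follow the rules above. It either requests transparent no-data pixels or sets a background color, and it checks the 0xRRGGBB format. Since BGCOLOR is ignored when transparent=true, the helper simply does not send it in that case. The endpoint, the layer name my_layer, and the bbox values are hypothetical sample values.

```python
import re
from typing import Optional
from urllib.parse import urlencode

# Assumed local petascope endpoint
ENDPOINT = "http://localhost:8080/rasdaman/ows"


def getmap_url(layer: str, bbox: str, transparent: bool = False,
               bgcolor: Optional[str] = None) -> str:
    """GetMap URL that either makes no-data transparent or colours it."""
    params = {"service": "WMS", "version": "1.3.0", "request": "GetMap",
              "layers": layer, "styles": "", "crs": "EPSG:4326",
              "bbox": bbox, "width": 80, "height": 60, "format": "image/png"}
    if transparent:
        # BGCOLOR would be ignored with transparent=true, so it is not sent
        params["TRANSPARENT"] = "true"
    elif bgcolor is not None:
        if not re.fullmatch(r"0x[0-9A-Fa-f]{6}", bgcolor):
            raise ValueError("BGCOLOR must have the form 0xRRGGBB")
        params["BGCOLOR"] = bgcolor
    return f"{ENDPOINT}?{urlencode(params)}"


blue = getmap_url("my_layer", "30,34,31,35", bgcolor="0x0000FF")
clear = getmap_url("my_layer", "30,34,31,35", transparent=True, bgcolor="0x0000FF")
```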

5.6.1.2. Interpolation¶

If the output CRS requested in a GetMap request differs from the coverage’s native CRS, petascope reprojects the map, applying resampling and interpolation. The algorithm used can be controlled with the non-standard GetMap parameter interpolation=${method}; the default is nearest-neighbour interpolation. See Geographic projection for the available methods and their meaning.
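A minimal Python sketch of a reprojecting GetMap request follows. It adds the interpolation parameter only when the requested CRS differs from the native one, because that is the only case in which resampling happens. The method name bilinear is an assumption; check Geographic projection for the accepted names. The layer name and the EPSG:3857 bbox are hypothetical sample values.

```python
from urllib.parse import urlencode

ENDPOINT = "http://localhost:8080/rasdaman/ows"  # assumed local petascope


def reprojected_getmap_url(layer: str, bbox: str, crs: str, native_crs: str,
                           interpolation: str = "bilinear") -> str:
    """GetMap URL; interpolation only matters when the map is reprojected."""
    params = {"service": "WMS", "version": "1.3.0", "request": "GetMap",
              "layers": layer, "styles": "", "crs": crs, "bbox": bbox,
              "width": 600, "height": 600, "format": "image/png"}
    if crs != native_crs:
        # Non-standard rasdaman parameter; when omitted, nearest neighbour is used
        params["interpolation"] = interpolation
    return f"{ENDPOINT}?{urlencode(params)}"


url_3857 = reprojected_getmap_url("my_layer", "3339584,4028802,3450904,4163881",
                                  "EPSG:3857", native_crs="EPSG:4326")
url_native = reprojected_getmap_url("my_layer", "30,34,31,35",
                                    "EPSG:4326", native_crs="EPSG:4326")
```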

5.6.1.3. Random parameter¶

Web browsers normally cache the WMS requests sent by a WMS client (e.g. WebWorldWind). To bypass this cache, append an extra parameter random, whose value is a random number, to every WMS GetMap request.

Petascope strips this random parameter from any W*S request it receives. Hence, if the request has already been processed, the cached result is returned as usual.
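The Python sketch below shows both sides of this mechanism. The client appends a fresh random value to defeat browser caching. A hypothetical server-side cache key then drops the parameter again, so two otherwise identical requests map to the same cached result. The key function only illustrates the stripping behaviour described above and is not petascope's actual implementation.

```python
import random
from urllib.parse import parse_qsl, urlencode, urlparse


def with_random(url: str) -> str:
    """Append random=<number> so the browser does not reuse a cached response."""
    separator = "&" if urlparse(url).query else "?"
    return f"{url}{separator}{urlencode({'random': random.random()})}"


def petascope_cache_key(url: str) -> str:
    """Hypothetical server-side key: the request with `random` stripped."""
    pairs = [(k, v) for k, v in parse_qsl(urlparse(url).query, keep_blank_values=True)
             if k.lower() != "random"]
    return urlencode(sorted(pairs))


base = ("http://localhost:8080/rasdaman/ows?service=WMS&version=1.3.0"
        "&request=GetMap&layers=my_layer&styles=")
```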

5.6.2. nD Coverages as WMS Layers¶

Petascope allows importing a 3D+ coverage as a WMS layer. To this end, the ingredients file used for wcst_import must contain "wms_import": true. For 3D+ coverages this works with the recipes regular_time_series, irregular_time_series, and general_coverage.

An ingredients file for the wcst_import.sh tool can, for example, define an irregular_time_series 3D coverage from 2D TIFF files.

Once the coverage is created, GetMap requests can use the additional (non-horizontal) axes for subsetting according to the OGC WMS 1.3.0 standard.

| Axis Type | Subset parameter |
|---|---|
| Time | time=… |
| Elevation | elevation=… |
| Other | dim_AxisName=… (e.g. dim_pressure=…) |

According to the WMS 1.3.0 specification, a subset on a non-geo-referenced axis can take the following form to slice at a specific value, for example:

TIME='2012-01-01T00:01:20Z'&dim_pressure=20

The TIME parameter does not support durations as part of the slicing value.

A GetMap request always returns a 2D result. If a non-geo-referenced axis is omitted from the request, it is treated as a slice at the upper bound along this axis. For example, in a time series the most recent time slice is delivered.

Examples:

Multiple values on the time axis of a 3D coverage:

http://localhost:8080/rasdaman/ows?service=WMS&version=1.3.0&request=GetMap&layers=test_wms_3d_time_series_irregular&bbox=30,34,31,35&width=80&height=60&styles=&crs=EPSG:4326&format=image/png&time="2015-02-01T00:00:00.000Z","2015-03-01T00:00:00.000Z","2015-04-01T00:00:00.000Z","2015-05-01T00:00:00.000Z"&TRANSPARENT=TRUE

Multiple values on the time and dim_forecast axes of a 4D coverage:

http://localhost:8080/rasdaman/ows?service=WMS&version=1.3.0&request=GetMap&layers=test_wms_4d_ecmwf_fire_netcdf&bbox=34.5,29.7,34.7,29.8&width=80&height=60&styles=&crs=EPSG:4326&format=image/png&dim_forecast=8,15,22&time="2017-02-10T00:00:00.000Z","2017-12-15T00:00:00.000Z","2017-09-18T00:00:00.000Z"&TRANSPARENT=TRUE
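The following Python sketch assembles GetMap URLs like the ones above. Axes other than time and elevation get the dim_ prefix, and time values are quoted and comma-separated as in the examples. A second helper mirrors the rule that an omitted axis is sliced at its upper bound. Both helpers are hypothetical. Keeping commas and colons unescaped via urlencode's safe argument is a stylistic choice, and the server should accept the percent-encoded form as well.

```python
from urllib.parse import urlencode

ENDPOINT = "http://localhost:8080/rasdaman/ows"  # assumed local petascope


def nd_getmap_url(layer: str, bbox: str, times=(), dims=None) -> str:
    """GetMap URL with extra (non-horizontal) axis subsets, WMS 1.3.0 style."""
    params = {"service": "WMS", "version": "1.3.0", "request": "GetMap",
              "layers": layer, "styles": "", "crs": "EPSG:4326", "bbox": bbox,
              "width": 80, "height": 60, "format": "image/png"}
    for axis, values in (dims or {}).items():
        # Axes other than time and elevation use the dim_ prefix
        params[f"dim_{axis}"] = ",".join(str(v) for v in values)
    if times:
        # Quoted, comma-separated timestamps, as in the examples above
        params["time"] = ",".join(f'"{t}"' for t in times)
    return f"{ENDPOINT}?{urlencode(params, safe=',:')}"


def implicit_slice(coords: list[str]) -> str:
    """Slice used when an axis is omitted: its upper bound (latest time)."""
    return max(coords)


url_4d = nd_getmap_url("test_wms_4d_ecmwf_fire_netcdf", "34.5,29.7,34.7,29.8",
                       times=["2017-02-10T00:00:00.000Z", "2017-12-15T00:00:00.000Z"],
                       dims={"forecast": [8, 15, 22]})
```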

5.6.3. GetMap with customized variables for a style¶

WMS GetMap allows adding vendor-specific key-value parameters when the requested style contains a WCPS or rasql query fragment that uses these variables. The key must have the prefix @ and must appear both in the style’s query fragment and in the GetMap request URL.

For example, the following WCPS query fragment style contains the user-defined parameters @minYear, @maxYear, and @totalYears:

(condense +
  over $t year(@minYear:@maxYear)
  using $c[year($t), model(2), scenario(2)])
/ @totalYears

A GetMap request using this style must specify values for the custom parameters as key=value pairs in the URL, e.g.:

http://localhost:8080/rasdaman/ows?SERVICE=WMS&VERSION=1.3.0
&REQUEST=GetMap&LAYERS=testLayer&STYLES=testStyle
&BBOX=618887,3228196,690885,3300195.0
&CRS=EPSG:32615&WIDTH=600&HEIGHT=600&FORMAT=image/png
&TRANSPARENT=true
&@minYear=2003&@maxYear=2005&@totalYears=3

Note

User-defined parameters in the style must be provided as KVP parameters in the GetMap request.

A GetMap request must not contain custom (@-prefixed) parameters that have not been defined in the corresponding style.
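The two rules in the note lend themselves to a client-side check. The Python sketch below collects the @-prefixed variables from the example style fragment and rejects a parameter set that is missing one of them or adds an undefined one. It then emits the extra KVP pairs. The helper names are hypothetical. Keeping "@" literal through safe="@" matches the example URL. A standards-compliant server should also decode the percent-encoded form %40, but that is an assumption about the deployment.

```python
import re
from urllib.parse import urlencode

# WCPS fragment of the example style above
STYLE_FRAGMENT = """(condense +
  over $t year(@minYear:@maxYear)
  using $c[year($t), model(2), scenario(2)])
/ @totalYears"""


def style_parameters(fragment: str) -> set[str]:
    """Names of the @-prefixed variables used in a style's query fragment."""
    return set(re.findall(r"@(\w+)", fragment))


def custom_kvp(fragment: str, values: dict) -> dict:
    """@-prefixed GetMap parameters, checked against the style's variables."""
    expected, given = style_parameters(fragment), set(values)
    if expected - given:
        raise ValueError(f"missing style parameters: {sorted(expected - given)}")
    if given - expected:
        raise ValueError(f"parameters not defined in style: {sorted(given - expected)}")
    return {f"@{name}": str(v) for name, v in values.items()}


extra = custom_kvp(STYLE_FRAGMENT, {"minYear": 2003, "maxYear": 2005, "totalYears": 3})
# Keep "@" literal in the query string instead of percent-encoding it as %40
query = urlencode(extra, safe="@")
```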

5.6.4. GetLegendGraphic request¶

WMS GetLegendGraphic returns the legend image (PNG or JPEG) associated with a style of a layer. An administrator can set a legend image for a style via a style creation request.

Required request parameters:

format - data format in which the legend image is returned; only image/png and image/jpeg are supported.

layer - the WMS layer which contains the specified style.

style - the style which contains the legend image.

Note

Any further parameters are ignored by rasdaman.

For example, this request returns the legend image for style color of layer cov1:

http://localhost:8080/rasdaman/ows?service=WMS&request=GetLegendGraphic&format=image/png&layer=cov1&style=color
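As a small sketch, the same request can be built in Python with the required parameters and the two supported formats enforced on the client side. The helper name is hypothetical, and the endpoint is the local one used in the example.

```python
from urllib.parse import urlencode

ENDPOINT = "http://localhost:8080/rasdaman/ows"  # assumed local petascope
LEGEND_FORMATS = {"image/png", "image/jpeg"}  # the only supported formats


def legend_url(layer: str, style: str, fmt: str = "image/png") -> str:
    """GetLegendGraphic URL for the legend image attached to a layer's style."""
    if fmt not in LEGEND_FORMATS:
        raise ValueError(f"unsupported legend format: {fmt}")
    params = {"service": "WMS", "request": "GetLegendGraphic",
              "format": fmt, "layer": layer, "style": style}
    # Keep "/" in the MIME type unescaped, matching the example URL
    return f"{ENDPOINT}?{urlencode(params, safe='/')}"
```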

When a style of a layer has an associated legend graphic, WMS GetCapabilities contains an additional <LegendURL> XML section for this style.

5.6.5. Layer Management¶

The non-standard API requests for WMS layer management are listed below.

Layers can be easily created from existing WCS coverages in two ways:

By enabling this during coverage import in the ingredients file with the wms_import option;

By manually sending an /rasdaman/admin/layer/activate HTTP request to petascope

Create a new WMS layer from an existing coverage MyCoverage:

/rasdaman/admin/layer/activate?COVERAGEID=MyCoverage

During coverage import this can be done with the wms_import option in the ingredients file.

Remove a WMS layer directly:

/rasdaman/admin/layer/deactivate?COVERAGEID=MyLayer

A layer is also removed indirectly when the associated WCS coverage is deleted.
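A minimal Python sketch that builds the activate and deactivate requests follows, with the query string introduced by "?". The host and port are assumptions. This sketch does not cover which HTTP method to use or whether admin credentials are required, so check the petascope admin documentation before sending these requests.

```python
from urllib.parse import urlencode

BASE_URL = "http://localhost:8080"  # assumed petascope host and port


def layer_admin_url(action: str, coverage_id: str) -> str:
    """URL of the non-standard layer activate/deactivate request."""
    if action not in ("activate", "deactivate"):
        raise ValueError("action must be 'activate' or 'deactivate'")
    query = urlencode({"COVERAGEID": coverage_id})
    return f"{BASE_URL}/rasdaman/admin/layer/{action}?{query}"
```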

5.6.6. Style Behavior¶

When a client sends GetMap requests, the rules below define (in conformance with the WMS 1.3 standard) how a style is applied to the requested layers:

If no styles are defined then rasdaman returns the data as-is, encoded in the requested format.

If some styles are defined, e.g. X, Y, and Z, then:

If the client specifies a style Y, then Y is applied.

If the client does not specify a style, then:

If the admin has set a style as default, e.g. Z, then Z is applied.